[Hands-on] HarmonyOS Next: How Did I Implement Audio Recording + Speech-to-Text in HarmonyOS Next?

First, wrap the functionality in two managers: one for recording, and one for converting the recording to text.

The recording manager needs three sets of imports:

- `audio` (the audio module) from `@ohos.multimedia.audio`
- `fs` (the file module) from `@ohos.file.fs`
- `abilityAccessCtrl` and `common` from `@kit.AbilityKit`

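Then define the recording manager class, `AudioManager`. It wraps an `AudioCapturer` with `init()`, `start()`, `stop()` and `release()` methods.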

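It also has a `requestPermission` method. This method asks the user for the microphone permission and runs the initialization once the permission is granted. The `ohos.permission.MICROPHONE` permission must also be declared under `requestPermissions` in the module's `module.json5`.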
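`init()` should run only after an actual grant. `requestPermissionsFromUser` resolves with a `PermissionRequestResult` even when the user taps "deny". The per-permission outcome is in its `authResults` array, where 0 means granted. Below is a minimal sketch of that check in plain TypeScript. `PermissionResultLike` is a local stand-in for the real result type, and `allGranted` is a hypothetical helper name, not part of `@kit.AbilityKit`.

```typescript
// Local stand-in for PermissionRequestResult from @kit.AbilityKit (only the fields used here).
interface PermissionResultLike {
  permissions: string[];
  authResults: number[]; // one code per requested permission; 0 means granted
}

// Hypothetical helper: true only if at least one permission was requested and all were granted.
function allGranted(result: PermissionResultLike): boolean {
  return result.authResults.length > 0 &&
    result.authResults.length === result.permissions.length &&
    result.authResults.every((code) => code === 0);
}

const granted: PermissionResultLike = { permissions: ["ohos.permission.MICROPHONE"], authResults: [0] };
const denied: PermissionResultLike = { permissions: ["ohos.permission.MICROPHONE"], authResults: [-1] };

console.log(allGranted(granted)); // true
console.log(allGranted(denied));  // false
```

Inside `requestPermission()`, the `.then` callback would receive the real result and call `this.init()` only when a check like this passes.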

Finally, export the `AudioManager` class.

Code structure:

That completes step one: wrapping the recording manager.

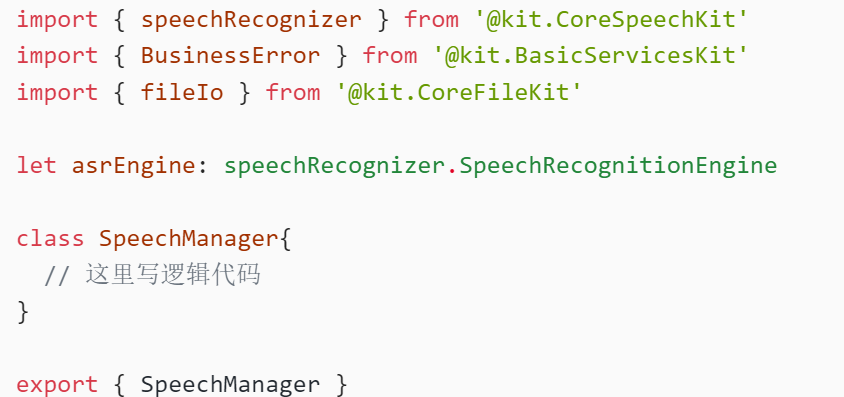

Next comes step two: wrapping the speech-to-text manager.

First, add the imports:

- `speechRecognizer` from `@kit.CoreSpeechKit`
- `BusinessError` from `@kit.BasicServicesKit`
- `fileIo` from `@kit.CoreFileKit`

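Then declare the speech recognition engine variable, `asrEngine`.

Next, define the speech manager class, `SpeechManager`.

Once it is written, export the speech-to-text manager.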
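`SpeechManager` hands every recognition result to the caller through a callback. The `onResult` listener fires for both intermediate and final results, so the caller usually needs to sort them. The sketch below makes several assumptions:

- It uses the `isFinal`, `isLast` and `result` fields of `speechRecognizer.SpeechRecognitionResult`.
- As is common for streaming recognizers, it assumes each intermediate result repeats the whole sentence recognized so far.
- `RecognitionResultLike` and `TranscriptCollector` are hypothetical local names, not Core Speech Kit types.

```typescript
// Local stand-in for speechRecognizer.SpeechRecognitionResult (only the fields used here).
interface RecognitionResultLike {
  isFinal: boolean; // true when this is the final text for the current sentence
  isLast: boolean;  // true when no more sentences will follow
  result: string;   // recognized text
}

// Hypothetical collector: keeps the latest partial text and appends finished sentences.
class TranscriptCollector {
  private finished: string[] = [];
  private partial = "";
  done = false;

  onResult(r: RecognitionResultLike): void {
    if (r.isFinal) {
      this.finished.push(r.result);
      this.partial = "";
    } else {
      this.partial = r.result; // a newer partial replaces the previous one
    }
    if (r.isLast) {
      this.done = true;
    }
  }

  // Sentences are joined without a separator, which suits zh-CN output.
  text(): string {
    return this.finished.join("") + this.partial;
  }
}

const collector = new TranscriptCollector();
collector.onResult({ isFinal: false, isLast: false, result: "hello" });
collector.onResult({ isFinal: false, isLast: false, result: "hello wor" });
collector.onResult({ isFinal: true, isLast: true, result: "hello world" });
console.log(collector.text()); // hello world
console.log(collector.done);   // true
```

A collector like this could be the `cb` passed to `SpeechManager.start()`, so the UI shows `text()` while listening and treats `done` as the end of the transcript.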

Code structure:

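The two managers work together through the sandbox path `filePath` created in between. While recording, we write the captured audio to this path. When converting, we read it back from the same path, and that is how the transcription happens.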
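The hand-off above fits in a few lines: the recorder's `stop()` resolves to `filePath`, and that same path goes to the recognizer's `start()`. This sketch rests on simplified assumptions:

- `Recorder`, `Transcriber` and `stopAndTranscribe` are hypothetical names.
- The recognizer callback is reduced to a string instead of a `SpeechRecognitionResult`.
- The fakes, with a made-up path, exist only so the flow runs outside HarmonyOS.

```typescript
// Stand-ins for the two managers, reduced to the methods this flow needs.
interface Recorder {
  // Resolves to the sandbox filePath, or to nothing if no recording was running
  stop(): Promise<string | void>;
}
interface Transcriber {
  // Simplified: the real SpeechManager passes a SpeechRecognitionResult, not a string
  start(filePath: string, cb: (text: string) => void): void;
}

// Hypothetical glue: stop recording, then hand the same path to the recognizer.
async function stopAndTranscribe(
  recorder: Recorder,
  transcriber: Transcriber,
  onText: (text: string) => void,
): Promise<boolean> {
  const filePath = await recorder.stop();
  if (typeof filePath !== "string" || filePath.length === 0) {
    return false; // nothing was recorded, so there is no file to read back
  }
  transcriber.start(filePath, onText);
  return true;
}

// Fakes for running the flow outside HarmonyOS; the path is made up.
const fakeRecorder: Recorder = { stop: async () => "/fake/files/1700000000000.pcm" };
const fakeTranscriber: Transcriber = {
  start: (path, cb) => cb(`transcript of ${path}`),
};

stopAndTranscribe(fakeRecorder, fakeTranscriber, (text) => console.log(text))
  .then((ok) => console.log("handed off:", ok));
```

Keeping the path as the only shared state means neither manager needs a reference to the other; the page that owns both just passes the string along.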

Logic code for the first manager (recording):

```typescript
import audio from '@ohos.multimedia.audio';
import fs from '@ohos.file.fs';
import { abilityAccessCtrl, common } from '@kit.AbilityKit';

// Options for fs.writeSync: write `length` bytes at byte `offset` of the file
class Options {
  offset: number = 0;
  length: number = 0;
}

export class AudioManager {
  private audioCapturer: audio.AudioCapturer | undefined = undefined;
  // Sandbox path of the current recording; SpeechManager reads the audio back from here
  private filePath: string = '';

  private init() {
    // 16 kHz, mono, 16-bit little-endian raw PCM: the format the ASR session declares later
    let audioStreamInfo: audio.AudioStreamInfo = {
      samplingRate: audio.AudioSamplingRate.SAMPLE_RATE_16000,
      channels: audio.AudioChannel.CHANNEL_1,
      sampleFormat: audio.AudioSampleFormat.SAMPLE_FORMAT_S16LE,
      encodingType: audio.AudioEncodingType.ENCODING_TYPE_RAW
    };
    let audioCapturerInfo: audio.AudioCapturerInfo = {
      source: audio.SourceType.SOURCE_TYPE_MIC, // audio source: microphone
      capturerFlags: 0 // capturer flags
    };
    let audioCapturerOptions: audio.AudioCapturerOptions = {
      streamInfo: audioStreamInfo,
      capturerInfo: audioCapturerInfo
    };
    // Create the AudioCapturer instance
    audio.createAudioCapturer(audioCapturerOptions, (err, capturer) => {
      if (err) {
        console.error(`Invoke createAudioCapturer failed, code is ${err.code}, message is ${err.message}`);
        return;
      }
      console.info('create AudioCapturer success');
      this.audioCapturer = capturer;
      // Subscribe to markReach: fires when the captured frame count reaches 1000
      capturer.on('markReach', 1000, (position: number) => {
        if (position === 1000) {
          console.info('markReach triggered');
        }
      });
      // Subscribe to periodReach: fires each time another 2000 frames have been captured
      capturer.on('periodReach', 2000, (position: number) => {
        if (position === 2000) {
          console.info('periodReach triggered');
        }
      });
    });
  }

  // Start recording; the loop ends after 150 buffers or as soon as stop() is called
  async start() {
    if (this.audioCapturer === undefined) {
      return;
    }
    const capturer = this.audioCapturer;
    // Capturing can only start from STATE_PREPARED, STATE_PAUSED or STATE_STOPPED
    let stateGroup = [audio.AudioState.STATE_PREPARED, audio.AudioState.STATE_PAUSED, audio.AudioState.STATE_STOPPED];
    if (stateGroup.indexOf(capturer.state.valueOf()) === -1) {
      console.error('start failed: capturer is not in a startable state');
      return;
    }
    await capturer.start(); // start capturing
    // The file holds raw PCM with no WAV header, so it gets a .pcm extension
    let currentFileName = `${Date.now()}.pcm`;
    this.filePath = getContext(this).filesDir + `/${currentFileName}`; // where the captured audio is stored
    let file: fs.File = fs.openSync(this.filePath, fs.OpenMode.READ_WRITE | fs.OpenMode.CREATE); // create the file if needed
    let numBuffersToCapture = 150; // read and write at most 150 buffers
    let offset = 0;
    try {
      let bufferSize = await capturer.getBufferSize();
      // Leave the loop once stop() has moved the capturer out of STATE_RUNNING
      while (numBuffersToCapture > 0 && capturer.state.valueOf() === audio.AudioState.STATE_RUNNING) {
        let buffer = await capturer.read(bufferSize, true);
        if (buffer === undefined) {
          console.error('read buffer failed');
        } else {
          // Append at the running offset, using the number of bytes actually read
          let options: Options = { offset: offset, length: buffer.byteLength };
          let written = fs.writeSync(file.fd, buffer, options);
          console.info(`write data: ${written} bytes`);
          offset += written;
        }
        numBuffersToCapture--;
      }
    } catch (e) {
      console.error(`capture loop failed: ${JSON.stringify(e)}`);
    } finally {
      fs.closeSync(file);
    }
  }

  // Stop recording; resolves to the path of the recorded file
  async stop(): Promise<string | void> {
    if (this.audioCapturer === undefined) {
      return;
    }
    const capturer = this.audioCapturer;
    // Only a capturer in STATE_RUNNING or STATE_PAUSED can be stopped
    if (capturer.state.valueOf() !== audio.AudioState.STATE_RUNNING &&
      capturer.state.valueOf() !== audio.AudioState.STATE_PAUSED) {
      return;
    }
    try {
      await capturer.stop(); // stop capturing
    } catch (e) {
      console.error(`Capturer stop threw: ${JSON.stringify(e)}`);
    }
    if (capturer.state.valueOf() === audio.AudioState.STATE_STOPPED) {
      console.info('Capturer stopped');
    } else {
      console.error('Capturer stop failed');
    }
    return this.filePath;
  }

  // Destroy the instance and free its resources
  async release() {
    if (this.audioCapturer === undefined) {
      return;
    }
    const capturer = this.audioCapturer;
    // release() only applies when the state is neither STATE_RELEASED nor STATE_NEW
    if (capturer.state.valueOf() === audio.AudioState.STATE_RELEASED ||
      capturer.state.valueOf() === audio.AudioState.STATE_NEW) {
      console.info('Capturer already released');
      return;
    }
    await capturer.release(); // free resources
    if (capturer.state.valueOf() === audio.AudioState.STATE_RELEASED) {
      console.info('Capturer released');
    } else {
      console.error('Capturer release failed');
    }
  }

  // Ask for the microphone permission and create the capturer only if it was granted
  requestPermission() {
    let atManager = abilityAccessCtrl.createAtManager();
    let context = getContext(this) as common.UIAbilityContext;
    atManager.requestPermissionsFromUser(context, ['ohos.permission.MICROPHONE'])
      .then((result) => {
        // The promise also resolves on denial; authResults[0] holds the outcome
        if (result.authResults[0] === abilityAccessCtrl.GrantStatus.PERMISSION_GRANTED) {
          this.init();
        } else {
          console.error('Microphone permission denied');
        }
      })
      .catch((err: Error) => {
        console.error(`requestPermissionsFromUser failed: ${err.message}`);
      });
  }
}
```
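Each method above checks the capturer state before acting:

- `start()` runs only from PREPARED, PAUSED or STOPPED.
- `stop()` runs only from RUNNING or PAUSED.
- `release()` runs from any state except RELEASED or NEW.

Here is a minimal sketch that collects those rules into one function. It is plain TypeScript so it can run outside HarmonyOS. `CapturerState` is a local stand-in that borrows the `audio.AudioState` member names but not their real values, and `canPerform` is a hypothetical helper, not part of the audio API.

```typescript
// Local stand-in for audio.AudioState: same member names, arbitrary string values.
enum CapturerState {
  NEW = "NEW",
  PREPARED = "PREPARED",
  RUNNING = "RUNNING",
  PAUSED = "PAUSED",
  STOPPED = "STOPPED",
  RELEASED = "RELEASED",
}

type Op = "start" | "stop" | "release";

// Hypothetical helper encoding the same guards used by start(), stop() and release().
function canPerform(op: Op, state: CapturerState): boolean {
  if (op === "start") {
    return [CapturerState.PREPARED, CapturerState.PAUSED, CapturerState.STOPPED].indexOf(state) !== -1;
  }
  if (op === "stop") {
    return state === CapturerState.RUNNING || state === CapturerState.PAUSED;
  }
  // op === "release"
  return state !== CapturerState.RELEASED && state !== CapturerState.NEW;
}

console.log(canPerform("start", CapturerState.RUNNING)); // false
console.log(canPerform("stop", CapturerState.RUNNING));  // true
console.log(canPerform("release", CapturerState.NEW));   // false
```

Keeping the rules in one place like this makes it easy to disable UI buttons that would otherwise hit an invalid transition.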

Logic code for the second manager (speech-to-text):

```typescript
import { speechRecognizer } from '@kit.CoreSpeechKit';
import { BusinessError } from '@kit.BasicServicesKit';
import { fileIo } from '@kit.CoreFileKit';

// Speech recognition engine shared by the methods below
let asrEngine: speechRecognizer.SpeechRecognitionEngine;

export class SpeechManager {
  private sessionId: string = '123456';

  // Create the engine with the callback API, wrapped in a Promise so start() can await it
  private createByCallback(): Promise<void> {
    // Engine creation parameters
    let extraParam: Record<string, Object> = { 'locate': 'CN', 'recognizerMode': 'short' };
    let initParamsInfo: speechRecognizer.CreateEngineParams = {
      language: 'zh-CN',
      online: 1,
      extraParams: extraParam
    };
    return new Promise<void>((resolve, reject) => {
      speechRecognizer.createEngine(initParamsInfo,
        (err: BusinessError, speechRecognitionEngine: speechRecognizer.SpeechRecognitionEngine) => {
          if (!err) {
            console.info('createEngine succeeded');
            asrEngine = speechRecognitionEngine; // keep the engine instance
            resolve();
          } else {
            // 1002200001: creation failed (unsupported language or mode, init timeout, missing resources, ...)
            // 1002200006: engine busy, usually because several apps call speech recognition at once
            // 1002200008: engine is being destroyed
            console.error('errCode: ' + err.code + ' errMessage: ' + JSON.stringify(err.message));
            reject(err);
          }
        });
    });
  }

  // Register the recognition callbacks; cb receives every intermediate and final result
  private setListener(cb: (result: speechRecognizer.SpeechRecognitionResult) => void) {
    let listener: speechRecognizer.RecognitionListener = {
      // Recognition started successfully
      onStart(sessionId: string, eventMessage: string) {
        console.info('onStart sessionId: ' + sessionId + ' eventMessage: ' + eventMessage);
      },
      // Event callback
      onEvent(sessionId: string, eventCode: number, eventMessage: string) {
        console.info('onEvent sessionId: ' + sessionId + ' eventCode: ' + eventCode + ' eventMessage: ' + eventMessage);
      },
      // Recognition results, both intermediate and final
      onResult(sessionId: string, result: speechRecognizer.SpeechRecognitionResult) {
        console.info('onResult sessionId: ' + sessionId + ' result: ' + JSON.stringify(result));
        cb(result);
      },
      // Recognition finished
      onComplete(sessionId: string, eventMessage: string) {
        console.info('onComplete sessionId: ' + sessionId + ' eventMessage: ' + eventMessage);
      },
      // Errors arrive here, e.g. 1002200006 when the engine is busy recognizing;
      // see the Core Speech Kit error code reference for the full list
      onError(sessionId: string, errorCode: number, errorMessage: string) {
        console.error('onError sessionId: ' + sessionId + ' errorCode: ' + errorCode + ' errorMessage: ' + errorMessage);
      },
    };
    asrEngine.setListener(listener);
  }

  // Start a session for 16 kHz, mono, 16-bit PCM (the format AudioManager records)
  private startListening() {
    let recognizerParams: speechRecognizer.StartParams = {
      sessionId: this.sessionId,
      audioInfo: { audioType: 'pcm', sampleRate: 16000, soundChannel: 1, sampleBit: 16 }
    };
    asrEngine.startListening(recognizerParams);
  }

  // Wait count × 40 ms
  private async countDownLatch(count: number) {
    while (count > 0) {
      await this.sleep(40);
      count--;
    }
  }

  // Sleep for ms milliseconds
  private sleep(ms: number): Promise<void> {
    return new Promise<void>(resolve => setTimeout(resolve, ms));
  }

  // Stream the recorded file to the engine in 1280-byte frames
  private async writeAudio(filePath: string) {
    if (!fileIo.accessSync(filePath)) {
      console.error('audio file not found: ' + filePath);
      return;
    }
    // Open the file saved during the recording phase
    let file = fileIo.openSync(filePath, fileIo.OpenMode.READ_ONLY);
    try {
      let buf: ArrayBuffer = new ArrayBuffer(1280);
      let offset: number = 0;
      // writeAudio accepts 640- or 1280-byte chunks, so a trailing partial frame is not sent
      while (fileIo.readSync(file.fd, buf, { offset: offset }) === 1280) {
        asrEngine.writeAudio(this.sessionId, new Uint8Array(buf));
        await this.countDownLatch(1); // pace the writes at roughly real time
        offset += 1280;
      }
      asrEngine.finish(this.sessionId); // signal that no more audio is coming
    } catch (e) {
      console.error('read file error ' + e);
    } finally {
      fileIo.closeSync(file);
    }
  }

  // Entry point: create the engine, register callbacks, start a session, then feed it the file
  async start(filePath: string, cb: (result: speechRecognizer.SpeechRecognitionResult) => void) {
    try {
      await this.createByCallback(); // wait for the engine instead of a fixed 1 s timeout
    } catch (e) {
      return; // already logged in createByCallback
    }
    this.setListener(cb);
    this.startListening();
    await this.writeAudio(filePath);
  }
}
```
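The 40 ms sleep in the write loop isn't arbitrary. At 16 kHz, 16-bit, mono, PCM runs at 16000 × 2 = 32,000 bytes per second, so one 1280-byte frame holds 40 ms of audio. Sleeping once per frame therefore feeds the engine at about real-time speed. The loop also sends only full 1280-byte frames. This matches the Core Speech Kit reference, which, as far as I can tell, accepts only 640- or 1280-byte chunks in `writeAudio`. Below is a plain TypeScript sketch of the framing and the arithmetic. `splitIntoFrames` is a hypothetical helper, and no HarmonyOS API is involved.

```typescript
const FRAME_BYTES = 1280;               // chunk size used by the write loop in SpeechManager
const BYTES_PER_SECOND = 16000 * 1 * 2; // 16 kHz, mono, 16-bit PCM

// Milliseconds of audio in one frame; this is where the 40 ms pacing comes from.
const FRAME_MS = (FRAME_BYTES * 1000) / BYTES_PER_SECOND;

// Hypothetical helper: split raw PCM into full frames, the way the readSync loop does.
function splitIntoFrames(
  pcm: Uint8Array,
  frameBytes: number = FRAME_BYTES,
): { frames: Uint8Array[]; droppedBytes: number } {
  const frames: Uint8Array[] = [];
  let offset = 0;
  while (offset + frameBytes <= pcm.length) {
    // subarray shares memory with pcm; use slice() if frames must outlive a reused buffer
    frames.push(pcm.subarray(offset, offset + frameBytes));
    offset += frameBytes;
  }
  return { frames, droppedBytes: pcm.length - offset };
}

const oneSecondPlus = new Uint8Array(BYTES_PER_SECOND + 100); // 1 s of silence plus 100 stray bytes
const { frames, droppedBytes } = splitIntoFrames(oneSecondPlus);
console.log(FRAME_MS);      // 40
console.log(frames.length); // 25
console.log(droppedBytes);  // 100
```

With the read loop as written, whatever follows the last full frame (up to 1,279 bytes, just under 40 ms) is never sent. That is normally harmless at the tail of an utterance.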


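Thanks for sharing.

I hope HarmonyOS keeps improving system stability and cuts down on crashes and reboots.

You crack me up. Sharing the git repo and the corresponding APIs with you should be enough. If you want the code inside, ask nicely and I'll still give it to you. What are you posturing for?

If you can't understand it, you're useless ("fw"); go practice more.

Git? You shared something? The APIs need *you* to share them? Look who's getting worked up, lol.

Please share the code.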


I've put it in the post as well, so you can look there too.