How does a HarmonyOS Next app obtain an audio stream?

import { audio } from '@kit.AudioKit';

let audioStreamInfo: audio.AudioStreamInfo = {
  samplingRate: audio.AudioSamplingRate.SAMPLE_RATE_44100,
  channels: audio.AudioChannel.CHANNEL_2,
  sampleFormat: audio.AudioSampleFormat.SAMPLE_FORMAT_S16LE,
  encodingType: audio.AudioEncodingType.ENCODING_TYPE_RAW
};

let audioCapturerInfo: audio.AudioCapturerInfo = {
  source: audio.SourceType.SOURCE_TYPE_MIC,
  capturerFlags: 0
};

let audioCapturerOptions: audio.AudioCapturerOptions = {
  streamInfo: audioStreamInfo,
  capturerInfo: audioCapturerInfo
};

async getAudioStreaming() {
  // let audioCapturer: audio.AudioCapturer;
  audio.createAudioCapturer(audioCapturerOptions, (err, data) => {
    if (err) {
      console.error(`AudioCapturer Created : Error: ${err}`);
    } else {
      console.info('AudioCapturer Created : Success : SUCCESS');
      let audioCapturer = data;
    }
  });
}

I followed the official sample to obtain an audio capturer, but it fails with: AudioCapturer Created : Error: Error: system error

More hands-on tutorials on how a HarmonyOS Next app obtains an audio stream are available at https://www.itying.com/category-93-b0.html

1. Check that the microphone permission is declared.

Add the following to module.json5:

"requestPermissions": [
  {
    "name": "ohos.permission.MICROPHONE",
    "reason": "$string:EntryAbility_desc",
    "usedScene": {
      "abilities": [
        "EntryAbility"
      ]
    }
  }
]

2. Check that the permission is requested from the user at startup.

Add the following to onWindowStageCreate:

// Requires abilityAccessCtrl from '@kit.AbilityKit' and BusinessError from '@kit.BasicServicesKit'
let atManager = abilityAccessCtrl.createAtManager();
atManager.requestPermissionsFromUser(this.context, ['ohos.permission.MICROPHONE']).then((data) => {
  console.info('data:' + JSON.stringify(data));
  console.info('data permissions:' + data.permissions);
  console.info('data authResults:' + data.authResults);
}).catch((err: BusinessError) => {
  console.error('requestPermissionsFromUser failed:' + JSON.stringify(err));
});
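The authResults array logged above lines up index for index with the permissions array, and an entry of 0 means the permission was granted (0 is the value of abilityAccessCtrl.GrantStatus.PERMISSION_GRANTED). As a minimal sketch, a small pure helper can turn that pair of arrays into the list of permissions that were refused. The name deniedPermissions is hypothetical and not part of AbilityKit.

```typescript
// Hypothetical helper, not part of AbilityKit.
// 0 is the value of abilityAccessCtrl.GrantStatus.PERMISSION_GRANTED.
const PERMISSION_GRANTED = 0;

// Returns the permissions whose matching authResults entry is not "granted".
function deniedPermissions(permissions: string[], authResults: number[]): string[] {
  return permissions.filter((_, i) => authResults[i] !== PERMISSION_GRANTED);
}

// Usage with the two fields of the result object logged above
// (the values here are made up for illustration):
const denied = deniedPermissions(['ohos.permission.MICROPHONE'], [-1]);
console.info('denied: ' + JSON.stringify(denied));
```

In the context of this thread, it would only make sense to create the audio capturer once this list comes back empty.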

The permission has most likely not been requested. Declare it in full in module.json5:

"requestPermissions": [

{

"name": "ohos.permission.MICROPHONE",

"reason": "$string:MICROPHONE_REASON",

"usedScene": {

"abilities": [

"EntryAbility"

],

"when": "always"

}

}

]

You can then use the sample code below as a reference:

index.ets:

import { BusinessError } from '@kit.BasicServicesKit';

import { abilityAccessCtrl, Permissions, common } from '@kit.AbilityKit';

import promptAction from '@ohos.promptAction';

import { checkPermissions } from '../utils/Permission';

import { audio } from '@kit.AudioKit';

// Permissions that must be requested at runtime

const permissions: Array<Permissions> = ['ohos.permission.MICROPHONE'];

// Get the application context

const context = getContext(this) as common.UIAbilityContext;

let audioCapturer: audio.AudioCapturer | undefined = undefined;

let audioStreamInfo: audio.AudioStreamInfo = {

samplingRate: audio.AudioSamplingRate.SAMPLE_RATE_48000, // sampling rate

channels: audio.AudioChannel.CHANNEL_2, // channel count

sampleFormat: audio.AudioSampleFormat.SAMPLE_FORMAT_S16LE, // sample format

encodingType: audio.AudioEncodingType.ENCODING_TYPE_RAW // encoding type

};

let audioCapturerInfo: audio.AudioCapturerInfo = {

source: audio.SourceType.SOURCE_TYPE_MIC, // audio source type

capturerFlags: 0 // audio capturer flags

};

let audioCapturerOptions: audio.AudioCapturerOptions = {

streamInfo: audioStreamInfo,

capturerInfo: audioCapturerInfo

};

let readDataCallback = (buffer: ArrayBuffer) => {

const view = new Int16Array(buffer); // 16-bit PCM samples, matching SAMPLE_FORMAT_S16LE

console.log(`【AudioCapturer】readDataCallback: received ${view.length} samples`);

};

let audioManager = audio.getAudioManager(); // Create the AudioManager instance first

let audioRoutingManager = audioManager.getRoutingManager(); // Then use it to obtain the AudioRoutingManager instance

// Initialization: create the capturer instance and register the data listener

function init() {

audioRoutingManager.getDevices(audio.DeviceFlag.INPUT_DEVICES_FLAG).then((data: audio.AudioDeviceDescriptors) => {

console.info('【AudioCapturer】device list');

data.forEach(v => {

// deviceRole 1 = input device, deviceType 15 = microphone

console.info(`【AudioCapturer】deviceRole: ${v.deviceRole}, deviceType: ${v.deviceType}, id: ${v.id}, name: ${v.name}`);

console.info(`【AudioCapturer】address: ${v.address}, sampleRates: ${v.sampleRates}, channelCounts: ${v.channelCounts}, channelMasks: ${v.channelMasks}`);

console.info(`【AudioCapturer】displayName: ${v.displayName}, encodingTypes: ${v.encodingTypes}`);

})

});

audio.createAudioCapturer(audioCapturerOptions, (err, capturer) => { // Create the AudioCapturer instance

if (err) {

console.error(`【AudioCapturer】Invoke createAudioCapturer failed, code is ${err.code}, message is ${err.message}`);

return;

}

console.info(`【AudioCapturer】: create AudioCapturer success`);

audioCapturer = capturer;

if (audioCapturer !== undefined) {

(audioCapturer as audio.AudioCapturer).on('readData', readDataCallback);

}

});

}

// Start an audio capture session

function start() {

if (audioCapturer !== undefined) {

let stateGroup = [audio.AudioState.STATE_PREPARED, audio.AudioState.STATE_PAUSED, audio.AudioState.STATE_STOPPED];

if (stateGroup.indexOf((audioCapturer as audio.AudioCapturer).state.valueOf()) === -1) { // Capture can be started only from STATE_PREPARED, STATE_PAUSED, or STATE_STOPPED

console.error(`【AudioCapturer】: start failed`);

return;

}

// Start capturing

(audioCapturer as audio.AudioCapturer).start((err: BusinessError) => {

if (err) {

console.error('【AudioCapturer】Capturer start failed.');

} else {

console.info('【AudioCapturer】Capturer start success.');

}

});

}

}

// Stop capturing

function stop() {

if (audioCapturer !== undefined) {

// The capturer can be stopped only in STATE_RUNNING or STATE_PAUSED

if ((audioCapturer as audio.AudioCapturer).state.valueOf() !== audio.AudioState.STATE_RUNNING && (audioCapturer as audio.AudioCapturer).state.valueOf() !== audio.AudioState.STATE_PAUSED) {

console.info('【AudioCapturer】Capturer is not running or paused');

return;

}

// Stop capturing

(audioCapturer as audio.AudioCapturer).stop((err: BusinessError) => {

if (err) {

console.error('【AudioCapturer】Capturer stop failed.');

} else {

console.info('【AudioCapturer】Capturer stop success.');

}

});

}

}

// Destroy the instance and release its resources

function release() {

if (audioCapturer !== undefined) {

// release is allowed only when the capturer state is neither STATE_RELEASED nor STATE_NEW

if ((audioCapturer as audio.AudioCapturer).state.valueOf() === audio.AudioState.STATE_RELEASED || (audioCapturer as audio.AudioCapturer).state.valueOf() === audio.AudioState.STATE_NEW) {

console.info('【AudioCapturer】Capturer already released');

return;

}

// Release the resources

(audioCapturer as audio.AudioCapturer).release((err: BusinessError) => {

if (err) {

console.error('【AudioCapturer】Capturer release failed.');

} else {

console.info('【AudioCapturer】Capturer release success.');

}

});

}

}

@Entry

@Component

struct Index {

build() {

Column() {

Button('Initialize recorder').onClick((event: ClickEvent) => {

checkPermissions().then((res) => {

if(res) {

console.info('【AudioCapturer】Permission granted, recording is possible')

init()

} else {

console.info('【AudioCapturer】Requesting permission')

this.reqPermissionsFromUser(permissions)

}

})

}).margin({ bottom: 30 })

Button('Start recording').onClick((event: ClickEvent) => {

start();

}).margin({ bottom: 30 })

Button('Stop recording').onClick((event: ClickEvent) => {

stop();

}).margin({ bottom: 30 })

Button('Release recorder').onClick((event: ClickEvent) => {

release();

})

}.width('100%').height('100%').justifyContent(FlexAlign.Center)

}

reqPermissionsFromUser(permissions: Array<Permissions>): void {

let atManager: abilityAccessCtrl.AtManager = abilityAccessCtrl.createAtManager();

// requestPermissionsFromUser checks the current grant status to decide whether to show the permission dialog

atManager.requestPermissionsFromUser(context, permissions).then((data) => {

let grantStatus: Array<number> = data.authResults;

let length: number = grantStatus.length;

for (let i = 0; i < length; i++) {

if (grantStatus[i] === 0) {

// The user granted the permission, so the target operation can proceed

console.info('Permission granted')

if (audioCapturer == null) {

init();

}

} else {

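// The user denied the permission: explain that it is required for this page and guide the user to enable it in system settings

promptAction.showToast({ message: 'Permission denied. Recording requires the microphone permission.' })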

return;

}

}

// Permission granted

}).catch((err: BusinessError) => {

console.error(`Failed to request permissions from user. Code is ${err.code}, message is ${err.message}`);

})

}

}

Permission.ets:

import { abilityAccessCtrl, bundleManager, Permissions } from '@kit.AbilityKit';

import { BusinessError } from '@kit.BasicServicesKit';

const permissions: Array<Permissions> = ['ohos.permission.MICROPHONE'];

async function checkPermissionGrant(permission: Permissions): Promise<abilityAccessCtrl.GrantStatus> {

let atManager: abilityAccessCtrl.AtManager = abilityAccessCtrl.createAtManager();

let grantStatus: abilityAccessCtrl.GrantStatus = abilityAccessCtrl.GrantStatus.PERMISSION_DENIED;

// Get the application's accessTokenID

let tokenId: number = 0;

try {

let bundleInfo: bundleManager.BundleInfo = await bundleManager.getBundleInfoForSelf(bundleManager.BundleFlag.GET_BUNDLE_INFO_WITH_APPLICATION);

let appInfo: bundleManager.ApplicationInfo = bundleInfo.appInfo;

tokenId = appInfo.accessTokenId;

} catch (error) {

const err: BusinessError = error as BusinessError;

console.error(`Failed to get bundle info for self. Code is ${err.code}, message is ${err.message}`);

}

// Check whether the application has been granted the permission

try {

grantStatus = await atManager.checkAccessToken(tokenId, permission);

} catch (error) {

const err: BusinessError = error as BusinessError;

console.error(`Failed to check access token. Code is ${err.code}, message is ${err.message}`);

}

return grantStatus;

}

export async function checkPermissions(): Promise<boolean> {

let grantStatus: abilityAccessCtrl.GrantStatus = await checkPermissionGrant(permissions[0]);

if (grantStatus === abilityAccessCtrl.GrantStatus.PERMISSION_GRANTED) {

// Already granted, so the target operation can proceed

return true;

} else {

// Not granted yet: the caller must request the microphone permission

return false;

}

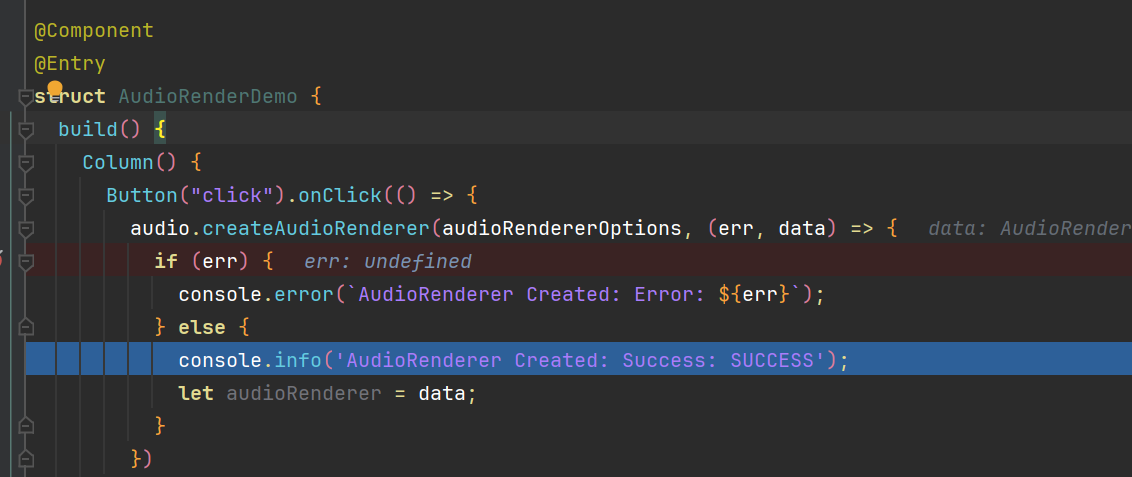

}import { audio } from '@kit.AudioKit';@Component @Entry struct AudioRenderDemo { build() { Column() { Button(“click”).onClick(() => { audio.createAudioRenderer(audioRendererOptions, (err, data) => { if (err) { console.error(

AudioRenderer Created: <span class="hljs-built_in"><span class="hljs-built_in">Error</span></span>: ${err}); } else { console.info(‘AudioRenderer Created: Success: SUCCESS’); let audioRenderer = data; } }) }) } } }let audioStreamInfo: audio.AudioStreamInfo = { samplingRate: audio.AudioSamplingRate.SAMPLE_RATE_44100, channels: audio.AudioChannel.CHANNEL_1, sampleFormat: audio.AudioSampleFormat.SAMPLE_FORMAT_S16LE, encodingType: audio.AudioEncodingType.ENCODING_TYPE_RAW };

let audioRendererInfo: audio.AudioRendererInfo = { usage: audio.StreamUsage.STREAM_USAGE_VOICE_COMMUNICATION, rendererFlags: 0 };

let audioRendererOptions: audio.AudioRendererOptions = { streamInfo: audioStreamInfo, rendererInfo: audioRendererInfo };<button style="position: absolute; padding: 4px 8px 0px; cursor: pointer; top: 8px; right: 8px; font-size: 14px;">复制</button>

This runs fine when I test it locally.

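In a HarmonyOS Next app, you can obtain an audio stream through the audio capture interfaces that the system provides. The key steps and points to note are:

- Use the AudioCapturer interface:
  - HarmonyOS provides audio capture interfaces in both TS and C++; choose whichever suits your project.
  - Create an AudioCapturer instance and configure the capture parameters: sampling rate, channel count, sample format, and encoding type.
  - Call start to begin recording, and subscribe to the incoming-data callback with on('readData') to receive the audio stream.

- Configure the audio capture parameters:
  - Set the sampling rate, channel count, sample format, and encoding type according to your requirements.
  - For the encoding type, only the PCM setting ENCODING_TYPE_RAW is currently supported.

- Process the audio stream:
  - In the readData callback you can process the captured audio, for example encode, compress, or transmit it.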
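As one illustration of processing inside the readData callback, the sketch below computes the RMS level of a single buffer, assuming it holds SAMPLE_FORMAT_S16LE samples as configured in this thread. rmsLevel is a hypothetical helper name; it relies only on the standard DataView and makes no AudioKit calls.

```typescript
// Hypothetical helper: RMS level of one block of 16-bit little-endian PCM,
// normalized so that a full-scale signal gives roughly 1.0.
function rmsLevel(buffer: ArrayBuffer): number {
  const view = new DataView(buffer);
  const sampleCount = Math.floor(buffer.byteLength / 2); // 2 bytes per S16 sample
  if (sampleCount === 0) {
    return 0;
  }
  let sumSquares = 0;
  for (let i = 0; i < sampleCount; i++) {
    // true = little-endian, matching SAMPLE_FORMAT_S16LE
    const sample = view.getInt16(i * 2, true) / 32768;
    sumSquares += sample * sample;
  }
  return Math.sqrt(sumSquares / sampleCount);
}

// Usage: a callback with the same shape as the readData callback in this thread.
const onReadData = (buffer: ArrayBuffer): void => {
  console.info(`level: ${rmsLevel(buffer).toFixed(3)}`);
};
onReadData(new ArrayBuffer(8)); // eight zero bytes, i.e. four silent samples
```

With interleaved stereo data this treats both channels as one sequence of samples, which is usually good enough for a simple level meter.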

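If you run into problems while obtaining the audio stream, first confirm that the capture parameters are configured correctly and that the AudioCapturer instance was created and started successfully. Also check that your code handles errors and exceptions, so that failures can be located and fixed quickly.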
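One way to sanity-check the capture parameters is to work out the data rate they imply and compare it with the buffer sizes you actually receive. The sketch below does that arithmetic for raw PCM; the PcmFormat interface and both function names are hypothetical and exist only for this example.

```typescript
// Hypothetical description of a raw PCM stream; field names are illustrative.
interface PcmFormat {
  sampleRate: number;     // samples per second per channel, e.g. 48000
  channels: number;       // 2 for CHANNEL_2
  bytesPerSample: number; // 2 for SAMPLE_FORMAT_S16LE
}

// Bytes of raw PCM produced per second of audio.
function bytesPerSecond(format: PcmFormat): number {
  return format.sampleRate * format.channels * format.bytesPerSample;
}

// Duration in milliseconds represented by a buffer of the given size.
function durationMs(byteLength: number, format: PcmFormat): number {
  return (byteLength * 1000) / bytesPerSecond(format);
}

// The 48 kHz, stereo, S16LE configuration used in the sample code of this thread.
const format: PcmFormat = { sampleRate: 48000, channels: 2, bytesPerSample: 2 };
console.info(`bytes per second: ${bytesPerSecond(format)}`);    // 192000
console.info(`3840 bytes last ${durationMs(3840, format)} ms`); // 20
```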
