HarmonyOS Next: face detection and focus mode set in the frame configuration have no effect


In the preview, face detection draws no bounding box, and the console shows no results.

Tapping the preview doesn't show a focus box either.

For more hands-on tutorials on this HarmonyOS Next issue (face detection and focus mode set in the frame configuration have no effect), see https://www.itying.com/category-93-b0.html

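If detection finds something, it returns the coordinates of the detected region. The box itself is not drawn for you: the developer has to draw it from those coordinates.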
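Concretely, drawing the box means turning the returned rectangle into something a canvas can draw. The hypothetical helper below (plain Java, no HarmonyOS classes) builds the same kind of array the demo code later in this thread passes to `Canvas.drawLines`: four segments (top, right, bottom and left edge), each stored as x0, y0, x1, y1. Where the coordinates come from (for example `Face.getFaceRect()`) and how they are mapped onto the preview is left to the calling code.

```java
import java.util.Arrays;

/** Hypothetical helper, not part of the HarmonyOS SDK. */
final class FaceBoxLines {
    private FaceBoxLines() {}

    /**
     * Returns the four edges of the rectangle (left, top, right, bottom) as
     * 4 segments x 4 floats = 16 floats: top, right, bottom, then left edge.
     */
    static float[] toLineSegments(float left, float top, float right, float bottom) {
        return new float[] {
            left, top, right, top,       // top edge: top-left -> top-right
            right, top, right, bottom,   // right edge: top-right -> bottom-right
            right, bottom, left, bottom, // bottom edge: bottom-right -> bottom-left
            left, bottom, left, top      // left edge: bottom-left -> back to top-left
        };
    }

    public static void main(String[] args) {
        // Example rectangle from (10, 20) to (110, 170)
        System.out.println(Arrays.toString(toLineSegments(10f, 20f, 110f, 170f)));
    }
}
```

Keeping the corner order consistent (each segment starts where the previous one ended) means the four segments form a closed outline.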


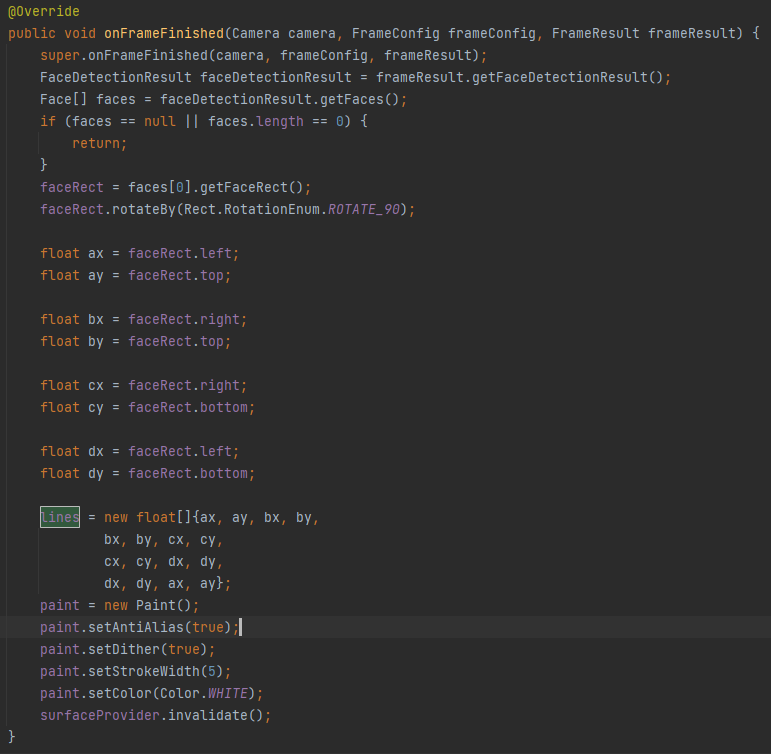

Got it. I used frameResult to get the Face object, and Face contains a Rect. How do I get that Rect to show up on screen?


Thanks a lot 😉!

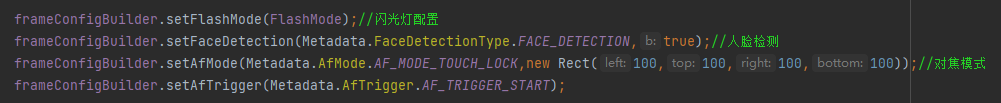

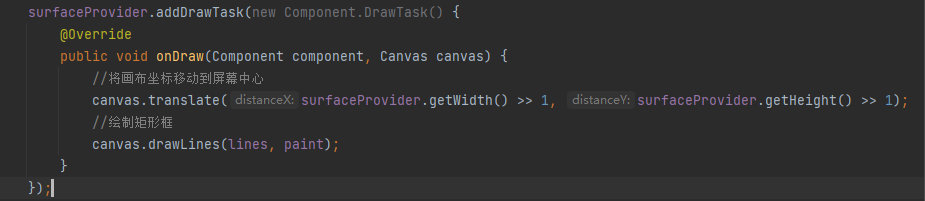

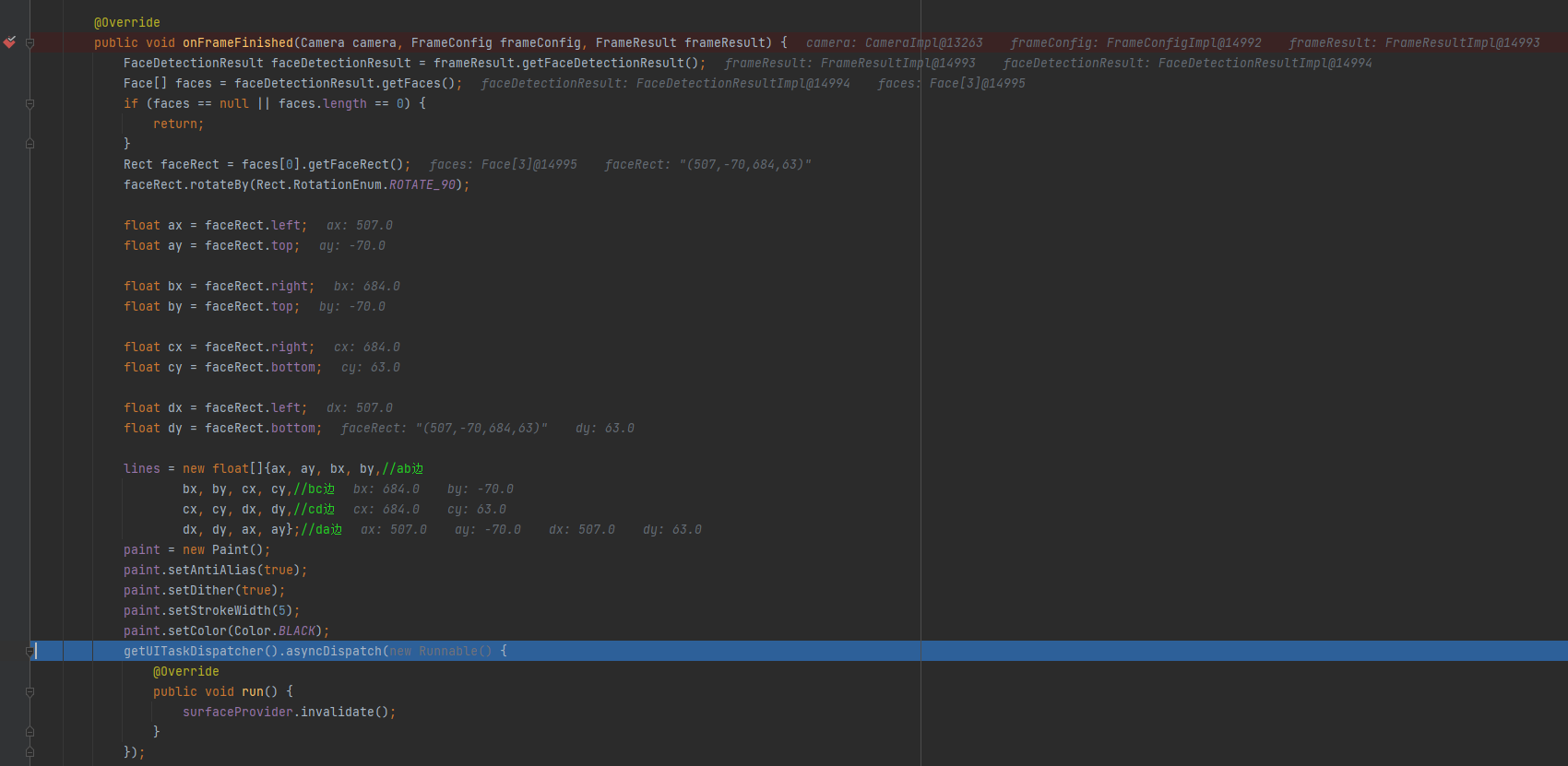

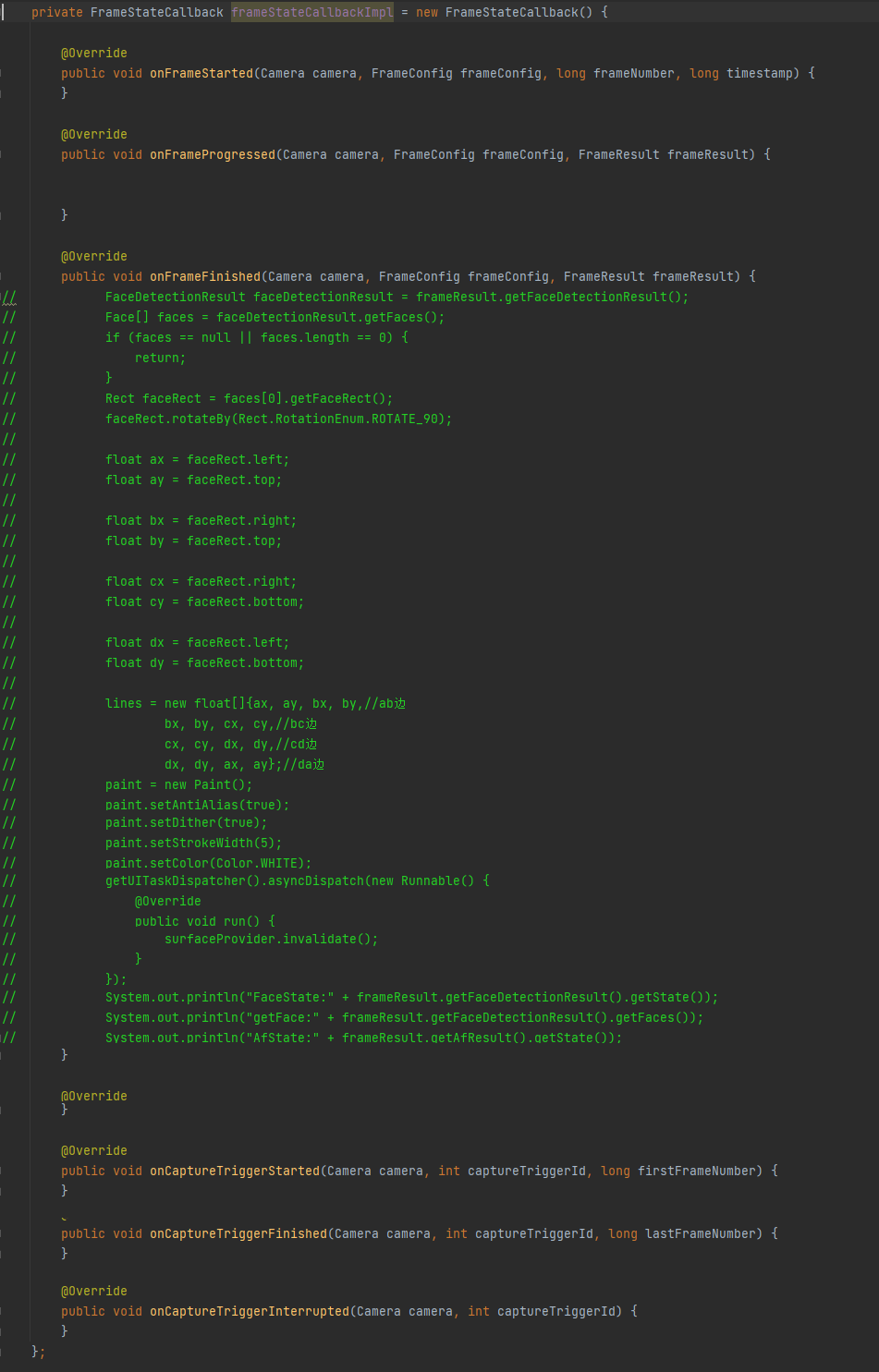

This is one of the official demos with my code added; it draws the detection box correctly. Just strip out the parts you don't need.

```java
package com.huawei.camerademo.slice;

import static com.huawei.camerademo.utils.CameraUtil.CAMERA_IMAGE_FILE_PATH;
import static ohos.media.camera.device.Camera.FrameConfigType.FRAME_CONFIG_PICTURE;
import static ohos.media.camera.device.Camera.FrameConfigType.FRAME_CONFIG_PREVIEW;

import com.huawei.camerademo.ImageAbility;
import com.huawei.camerademo.ResourceTable;
import com.huawei.camerademo.listener.MyAsrListener;
import com.huawei.camerademo.utils.CameraUtil;
import com.huawei.camerademo.utils.DistributeFileUtil;
import com.huawei.camerademo.utils.MyDrawTask;
import com.huawei.camerademo.utils.PermissionBridge;

import com.alibaba.fastjson.JSONObject;

import ohos.aafwk.ability.AbilitySlice;
import ohos.aafwk.content.Intent;
import ohos.aafwk.content.Operation;
import ohos.agp.components.Component;
import ohos.agp.components.ComponentContainer;
import ohos.agp.components.DirectionalLayout;
import ohos.agp.components.Image;
import ohos.agp.components.surfaceprovider.SurfaceProvider;
import ohos.agp.graphics.Surface;
import ohos.agp.graphics.SurfaceOps;
import ohos.agp.render.Canvas;
import ohos.agp.render.Paint;
import ohos.agp.utils.Color;
import ohos.agp.utils.Rect;
import ohos.ai.asr.AsrClient;
import ohos.ai.asr.AsrIntent;
import ohos.ai.asr.AsrListener;
import ohos.ai.asr.util.AsrResultKey;
import ohos.app.Environment;
import ohos.app.dispatcher.TaskDispatcher;
import ohos.eventhandler.EventHandler;
import ohos.eventhandler.EventRunner;
import ohos.eventhandler.InnerEvent;
import ohos.hiviewdfx.HiLog;
import ohos.hiviewdfx.HiLogLabel;
import ohos.media.audio.AudioCapturer;
import ohos.media.audio.AudioCapturerInfo;
import ohos.media.audio.AudioStreamInfo;
import ohos.media.camera.CameraKit;
import ohos.media.camera.device.*;
import ohos.media.camera.params.Face;
import ohos.media.camera.params.FaceDetectionResult;
import ohos.media.camera.params.Metadata;
import ohos.media.image.ImageReceiver;
import ohos.media.image.common.ImageFormat;
import ohos.utils.PacMap;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
```

```java
/**
 * MainAbilitySlice
 *
 * @since 2021-03-08
 */
public class MainAbilitySlice extends AbilitySlice implements PermissionBridge.OnPermissionStateListener {
    private static final HiLogLabel TAG = new HiLogLabel(3, 0xD001100, "MainAbilitySlice");
    private static final int EVENT_IMAGESAVING_PROMTING = 0x0000024;
    private static final int POOL_SIZE = 3;
    private static final int ALIVE_TIME = 3;
    private static final int CAPACITY = 6;
    private static final int SLEEP_TIME = 200;
    private static final int BYTES_LENGTH = 1280;
    private static final int VAD_END_WAIT_MS = 2000;
    private static final int VAD_FRONT_WAIT_MS = 4800;
    private static final int SAMPLE_RATE = 16000;
    private static final int TIMEOUT_DURATION = 20000;
    private static final int SCREEN_WIDTH = 1080;
    private static final int SCREEN_HEIGHT = 2340;
    private static final int IMAGE_RCV_CAPACITY = 9;
    private static final String IMG_FILE_PREFIX = "IMG_";
    // The ImageReceiver is created with ImageFormat.JPEG, so save with a matching extension
    private static final String IMG_FILE_TYPE = ".jpg";
    private static Map<String, Boolean> COMMAND_MAP = new HashMap<>();
    private static AsrClient asrClient;

    static {
        // Voice commands that trigger a capture: "take a photo" and "cheese"
        COMMAND_MAP.put("拍照", true);
        COMMAND_MAP.put("茄子", true);
    }

    // Bound to the runner of the thread that constructs the slice (the UI thread),
    // so anything delivered through this handler may update UI components.
    private EventHandler handler = new EventHandler(EventRunner.current()) {
        @Override
        protected void processEvent(InnerEvent event) {
            switch (event.eventId) {
                case EVENT_IMAGESAVING_PROMTING:
                    HiLog.info(TAG, "EVENT_IMAGESAVING_PROMTING");
                    MyDrawTask drawTask = new MyDrawTask(smallImage);
                    smallImage.addDrawTask(drawTask);
                    drawTask.putPixelMap(CameraUtil.getPixelMap(bytes, "", 1));
                    smallImage.setEnabled(true);
                    break;
                default:
                    break;
            }
        }
    };

    private Surface previewSurface;
    private SurfaceProvider surfaceProvider;
    private boolean isCameraRear;
    private Camera cameraDevice;
    // Handler on a background runner ("CameraBackground"), used for camera creation
    private EventHandler creamEventHandler;
    private ImageReceiver imageReceiver;
    private Image takePictureImage;
    private Image switchCameraImage;
    private File targetFile;
    private Image smallImage;
    private byte[] bytes;
    private boolean isRecord = false;
    private ThreadPoolExecutor poolExecutor;
    private AudioCapturer audioCapturer;
    private boolean recognizeOver;
    private String fileName;
    // Last detected face rectangle and the four edges of its box, read by the draw task
    private Rect faceRect;
    private float[] lines;
    private Paint paint;

    @Override
    public void onStart(Intent intent) {
        HiLog.info(TAG, "onStart");
        super.onStart(intent);
        super.setUIContent(ResourceTable.Layout_ability_main);
        new PermissionBridge().setOnPermissionStateListener(this);
    }
```

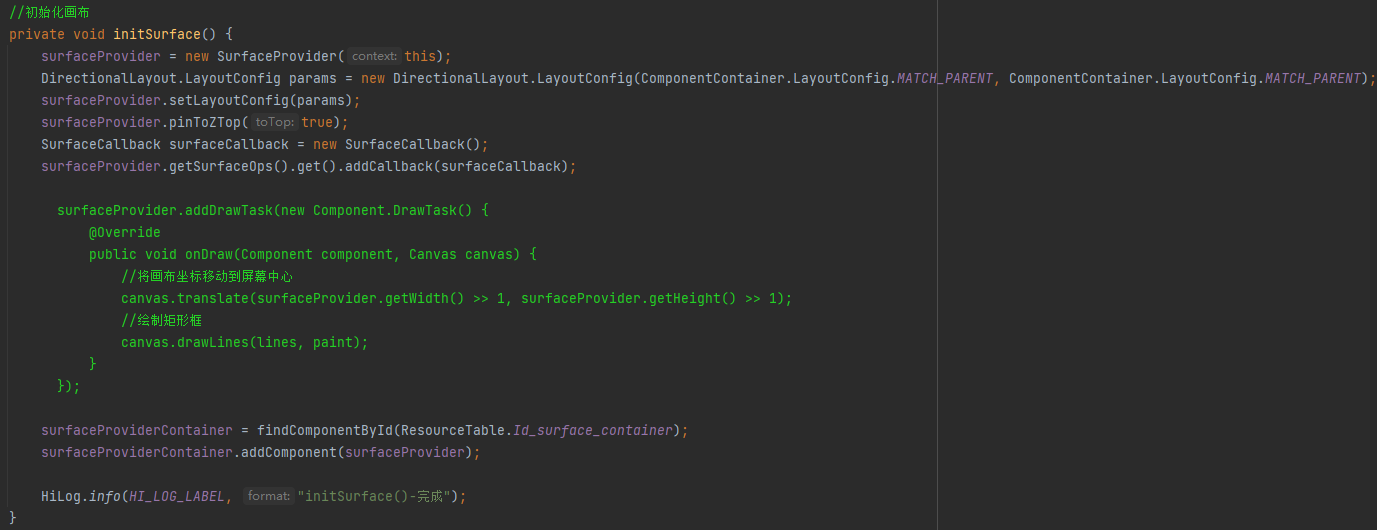

```java
    private void initSurface() {
        paint = new Paint();
        paint.setAntiAlias(true);
        paint.setDither(true);
        paint.setStrokeWidth(5);
        paint.setColor(Color.WHITE);
        // Transparent window + pinToZTop(false): the preview shows through the window
        // and the draw task below can paint the face box over it.
        getWindow().setTransparent(true);
        surfaceProvider = new SurfaceProvider(this);
        DirectionalLayout.LayoutConfig params =
                new DirectionalLayout.LayoutConfig(
                        ComponentContainer.LayoutConfig.MATCH_PARENT, ComponentContainer.LayoutConfig.MATCH_PARENT);
        surfaceProvider.setLayoutConfig(params);
        surfaceProvider.pinToZTop(false);
        surfaceProvider.addDrawTask(new Component.DrawTask() {
            @Override
            public void onDraw(Component component, Canvas canvas) {
                if (lines != null && lines.length != 0) {
                    // Move the origin to the centre of the view, then draw the four edges
                    canvas.translate(surfaceProvider.getWidth() >> 1, surfaceProvider.getHeight() >> 1);
                    canvas.drawLines(lines, paint);
                } else if (faceRect != null) {
                    // faceRect stays null until the first face is detected
                    canvas.clipRect(faceRect);
                }
            }
        });
        surfaceProvider.getSurfaceOps().get().addCallback(new SurfaceCallBack());
        Component surfaceContainer = findComponentById(ResourceTable.Id_surface_container);
        if (surfaceContainer instanceof ComponentContainer) {
            ((ComponentContainer) surfaceContainer).addComponent(surfaceProvider);
        }
    }
```

```java
    private void initControlComponents() {
        if (findComponentById(ResourceTable.Id_tack_picture_btn) instanceof Image) {
            takePictureImage = (Image) findComponentById(ResourceTable.Id_tack_picture_btn);
        }
        takePictureImage.setClickedListener(this::takePicture);
        if (findComponentById(ResourceTable.Id_switch_camera_btn) instanceof Image) {
            switchCameraImage = (Image) findComponentById(ResourceTable.Id_switch_camera_btn);
            MyDrawTask drawTask = new MyDrawTask(switchCameraImage);
            switchCameraImage.addDrawTask(drawTask);
        }
        switchCameraImage.setClickedListener(component -> switchClicked());
        if (findComponentById(ResourceTable.Id_small_pic) instanceof Image) {
            smallImage = (Image) findComponentById(ResourceTable.Id_small_pic);
        }
        smallImage.setClickedListener(component -> showBigPic());
    }

    private void openAudio() {
        HiLog.info(TAG, "openAudio");
        if (!isRecord) {
            asrClient.startListening(setStartIntent());
            isRecord = true;
            poolExecutor.submit(new AudioCaptureRunnable());
        }
    }

    private void takePicture(Component component) {
        HiLog.info(TAG, "takePicture");
        if (!takePictureImage.isEnabled()) {
            HiLog.info(TAG, "takePicture return");
            return;
        }
        if (cameraDevice == null || imageReceiver == null) {
            return;
        }
        FrameConfig.Builder framePictureConfigBuilder = cameraDevice.getFrameConfigBuilder(FRAME_CONFIG_PICTURE);
        framePictureConfigBuilder.addSurface(imageReceiver.getRecevingSurface());
        FrameConfig pictureFrameConfig = framePictureConfigBuilder.build();
        cameraDevice.triggerSingleCapture(pictureFrameConfig);
    }

    private void switchClicked() {
        if (!takePictureImage.isEnabled()) {
            return;
        }
        takePictureImage.setEnabled(false);
        isCameraRear = !isCameraRear;
        CameraUtil.setIsCameraRear(isCameraRear);
        openCamera();
    }

    private void openCamera() {
        imageReceiver = ImageReceiver.create(SCREEN_WIDTH, SCREEN_HEIGHT, ImageFormat.JPEG, IMAGE_RCV_CAPACITY);
        imageReceiver.setImageArrivalListener(this::saveImage);
        CameraKit cameraKit = CameraKit.getInstance(getApplicationContext());
        String[] cameraList = cameraKit.getCameraIds();
        String cameraId = cameraList.length > 1 && isCameraRear ? cameraList[1] : cameraList[0];
        CameraStateCallbackImpl cameraStateCallback = new CameraStateCallbackImpl();
        cameraKit.createCamera(cameraId, cameraStateCallback, creamEventHandler);
    }
```

```java
    private void saveImage(ImageReceiver receiver) {
        fileName = IMG_FILE_PREFIX + System.currentTimeMillis() + IMG_FILE_TYPE;
        targetFile = new File(getExternalFilesDir(Environment.DIRECTORY_PICTURES), fileName);
        try {
            HiLog.info(TAG, "filePath is " + targetFile.getCanonicalPath());
        } catch (IOException e) {
            HiLog.error(TAG, "filePath is error");
        }
        ohos.media.image.Image image = receiver.readNextImage();
        if (image == null) {
            return;
        }
        ohos.media.image.Image.Component component = image.getComponent(ImageFormat.ComponentType.JPEG);
        bytes = new byte[component.remaining()];
        component.read(bytes);
        try (FileOutputStream output = new FileOutputStream(targetFile)) {
            output.write(bytes);
            output.flush();
            handler.sendEvent(EVENT_IMAGESAVING_PROMTING);
            DistributeFileUtil.copyPicToDistributedDir(MainAbilitySlice.this, targetFile, fileName);
        } catch (IOException e) {
            HiLog.info(TAG, "IOException, Save image failed");
        }
    }

    private void releaseCamera() {
        if (cameraDevice != null) {
            cameraDevice.release();
            cameraDevice = null;
        }
        if (imageReceiver != null) {
            imageReceiver.release();
            imageReceiver = null;
        }
        if (creamEventHandler != null) {
            creamEventHandler.removeAllEvent();
            creamEventHandler = null;
        }
    }

    @Override
    protected void onStop() {
        super.onStop();
        releaseCamera();
    }

    @Override
    public void onPermissionGranted() {
        getWindow().setTransparent(true);
        initSurface();
        initAudioCapturer();
        initControlComponents();
        initAsrClient();
        creamEventHandler = new EventHandler(EventRunner.create("CameraBackground"));
    }
```

```java
    private void initAudioCapturer() {
        poolExecutor =
                new ThreadPoolExecutor(
                        POOL_SIZE,
                        POOL_SIZE,
                        ALIVE_TIME,
                        TimeUnit.SECONDS,
                        new LinkedBlockingQueue<>(CAPACITY),
                        new ThreadPoolExecutor.DiscardOldestPolicy());
        AudioStreamInfo audioStreamInfo =
                new AudioStreamInfo.Builder()
                        .encodingFormat(AudioStreamInfo.EncodingFormat.ENCODING_PCM_16BIT)
                        .channelMask(AudioStreamInfo.ChannelMask.CHANNEL_IN_MONO)
                        .sampleRate(SAMPLE_RATE)
                        .build();
        AudioCapturerInfo audioCapturerInfo = new AudioCapturerInfo.Builder().audioStreamInfo(audioStreamInfo).build();
        audioCapturer = new AudioCapturer(audioCapturerInfo);
    }

    private boolean recognizeWords(String result) {
        JSONObject jsonObject = JSONObject.parseObject(result);
        JSONObject resultObject = new JSONObject();
        if (jsonObject.getJSONArray("result").get(0) instanceof JSONObject) {
            resultObject = (JSONObject) jsonObject.getJSONArray("result").get(0);
        }
        String resultWord = resultObject.getString("ori_word").replace(" ", "");
        boolean command = COMMAND_MAP.getOrDefault(resultWord, false);
        HiLog.info(TAG, "======" + resultWord + "===" + command);
        return command;
    }

    private void initListener() {
        AsrListener asrListener = new MyAsrListener() {
            @Override
            public void onInit(PacMap params) {
                super.onInit(params);
                openAudio();
                HiLog.info(TAG, "======onInit======");
            }

            @Override
            public void onError(int error) {
                super.onError(error);
                HiLog.info(TAG, "======error:" + error);
            }

            @Override
            public void onIntermediateResults(PacMap pacMap) {
                super.onIntermediateResults(pacMap);
                HiLog.info(TAG, "======onIntermediateResults:");
                String result = pacMap.getString(AsrResultKey.RESULTS_INTERMEDIATE);
                boolean recognizeResult = recognizeWords(result);
                if (recognizeResult && !recognizeOver) {
                    recognizeOver = true;
                    takePicture(new Component(getContext()));
                    asrClient.stopListening();
                }
            }

            @Override
            public void onResults(PacMap results) {
                super.onResults(results);
                HiLog.info(TAG, "======onResults:");
                recognizeOver = false;
                asrClient.startListening(setStartIntent());
            }

            @Override
            public void onEnd() {
                super.onEnd();
                HiLog.info(TAG, "======onEnd:");
                recognizeOver = false;
                asrClient.stopListening();
                asrClient.startListening(setStartIntent());
            }
        };
        if (asrClient != null) {
            asrClient.init(setInitIntent(), asrListener);
        }
    }

    private void initAsrClient() {
        asrClient = AsrClient.createAsrClient(this).orElse(null);
        TaskDispatcher taskDispatcher = getAbility().getMainTaskDispatcher();
        taskDispatcher.asyncDispatch(
                new Runnable() {
                    @Override
                    public void run() {
                        initListener();
                    }
                });
    }

    @Override
    public void onPermissionDenied() {}
```

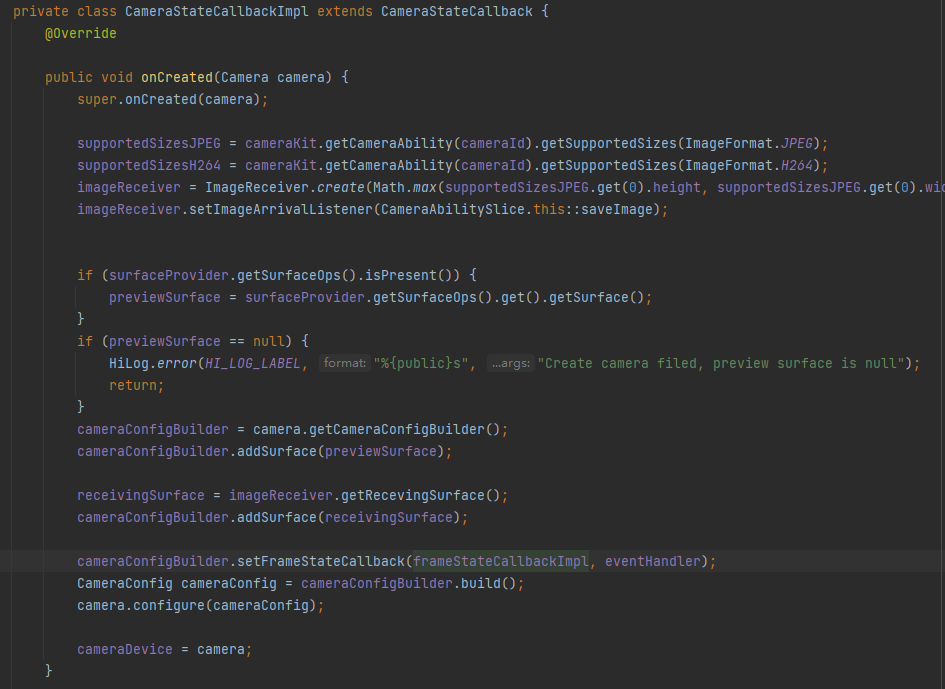

```java
    class CameraStateCallbackImpl extends CameraStateCallback {
        CameraStateCallbackImpl() {}

        @Override
        public void onCreated(Camera camera) {
            previewSurface = surfaceProvider.getSurfaceOps().get().getSurface();
            if (previewSurface == null) {
                HiLog.info(TAG, "create camera failed, preview surface is null");
                return;
            }
            try {
                Thread.sleep(SLEEP_TIME);
            } catch (InterruptedException exception) {
                HiLog.info(TAG, "Waiting to be interrupted");
            }
            CameraConfig.Builder cameraConfigBuilder = camera.getCameraConfigBuilder();
            cameraConfigBuilder.addSurface(previewSurface);
            cameraConfigBuilder.addSurface(imageReceiver.getRecevingSurface());
            // Frame results are delivered through `handler` (UI thread), so onFrameFinished()
            // may call surfaceProvider.invalidate(). Passing a background handler such as
            // creamEventHandler here would run the callback off the UI thread.
            cameraConfigBuilder.setFrameStateCallback(new MyFrameStateCallback(), handler);
            camera.configure(cameraConfigBuilder.build());
            cameraDevice = camera;
            enableImageGroup();
        }

        @Override
        public void onConfigured(Camera camera) {
            FrameConfig.Builder framePreviewConfigBuilder = camera.getFrameConfigBuilder(FRAME_CONFIG_PREVIEW);
            framePreviewConfigBuilder.addSurface(previewSurface);
            // Enable face and smile detection on the looping preview frames
            framePreviewConfigBuilder.setFaceDetection(Metadata.FaceDetectionType.FACE_DETECTION
                    | Metadata.FaceDetectionType.FACE_SMILE_DETECTION, true);
            try {
                camera.triggerLoopingCapture(framePreviewConfigBuilder.build());
            } catch (IllegalArgumentException e) {
                HiLog.error(TAG, "Argument Exception");
            } catch (IllegalStateException e) {
                HiLog.error(TAG, "State Exception");
            }
        }

        private void enableImageGroup() {
            takePictureImage.setEnabled(true);
            switchCameraImage.setEnabled(true);
        }
    }
```

```java
    class MyFrameStateCallback extends FrameStateCallback {
        public MyFrameStateCallback() {
            super();
        }

        @Override
        public void onFrameStarted(Camera camera, FrameConfig frameConfig, long frameNumber, long timestamp) {
            super.onFrameStarted(camera, frameConfig, frameNumber, timestamp);
        }

        @Override
        public void onFrameProgressed(Camera camera, FrameConfig frameConfig, FrameResult frameResult) {
            super.onFrameProgressed(camera, frameConfig, frameResult);
        }

        @Override
        public void onFrameFinished(Camera camera, FrameConfig frameConfig, FrameResult frameResult) {
            super.onFrameFinished(camera, frameConfig, frameResult);
            FaceDetectionResult faceDetectionResult = frameResult.getFaceDetectionResult();
            // No result or no faces in this frame: clear the box and redraw
            Face[] faces = faceDetectionResult == null ? null : faceDetectionResult.getFaces();
            if (faces == null || faces.length == 0) {
                lines = null;
                surfaceProvider.invalidate();
                return;
            }
            // Only the first detected face is drawn
            faceRect = faces[0].getFaceRect();
            faceRect.rotateBy(Rect.RotationEnum.ROTATE_90);
            // Corners: A top-left, B top-right, C bottom-right, D bottom-left
            float ax = faceRect.left;
            float ay = faceRect.top;
            float bx = faceRect.right;
            float by = faceRect.top;
            float cx = faceRect.right;
            float cy = faceRect.bottom;
            float dx = faceRect.left;
            float dy = faceRect.bottom;
            // Four segments A-B, B-C, C-D, D-A, each as x0, y0, x1, y1
            lines = new float[]{ax, ay, bx, by,
                    bx, by, cx, cy,
                    cx, cy, dx, dy,
                    dx, dy, ax, ay};
            // Triggers onDraw(); must run on the UI thread (see setFrameStateCallback above)
            surfaceProvider.invalidate();
        }

        @Override
        public void onFrameError(Camera camera, FrameConfig frameConfig, int errorCode, FrameResult frameResult) {
            super.onFrameError(camera, frameConfig, errorCode, frameResult);
        }

        @Override
        public void onCaptureTriggerStarted(Camera camera, int captureTriggerId, long firstFrameNumber) {
            super.onCaptureTriggerStarted(camera, captureTriggerId, firstFrameNumber);
        }

        @Override
        public void onCaptureTriggerFinished(Camera camera, int captureTriggerId, long lastFrameNumber) {
            super.onCaptureTriggerFinished(camera, captureTriggerId, lastFrameNumber);
        }

        @Override
        public void onCaptureTriggerInterrupted(Camera camera, int captureTriggerId) {
            super.onCaptureTriggerInterrupted(camera, captureTriggerId);
        }
    }
```

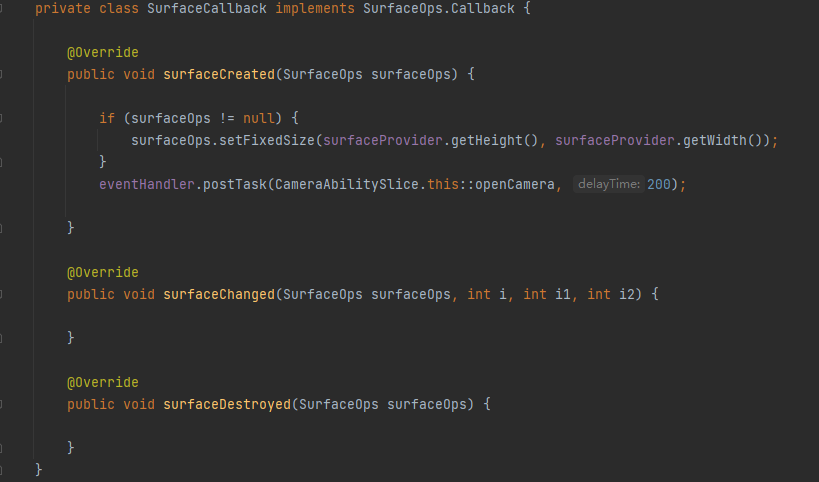

```java
    class SurfaceCallBack implements SurfaceOps.Callback {
        @Override
        public void surfaceCreated(SurfaceOps callbackSurfaceOps) {
            if (callbackSurfaceOps != null) {
                callbackSurfaceOps.setFixedSize(surfaceProvider.getHeight(), surfaceProvider.getWidth());
            }
            openCamera();
        }

        @Override
        public void surfaceChanged(SurfaceOps callbackSurfaceOps, int format, int width, int height) {}

        @Override
        public void surfaceDestroyed(SurfaceOps callbackSurfaceOps) {}
    }

    private void showBigPic() {
        Intent intent = new Intent();
        Operation operation =
                new Intent.OperationBuilder()
                        .withBundleName(getBundleName())
                        .withAbilityName(ImageAbility.class.getName())
                        .build();
        intent.setOperation(operation);
        if (targetFile != null) {
            try {
                intent.setParam(CAMERA_IMAGE_FILE_PATH, targetFile.getCanonicalPath());
            } catch (IOException e) {
                HiLog.error(TAG, "filePath is error");
            }
        }
        startAbility(intent);
    }

    private AsrIntent setInitIntent() {
        AsrIntent initIntent = new AsrIntent();
        initIntent.setAudioSourceType(AsrIntent.AsrAudioSrcType.ASR_SRC_TYPE_PCM);
        initIntent.setEngineType(AsrIntent.AsrEngineType.ASR_ENGINE_TYPE_LOCAL);
        return initIntent;
    }

    private AsrIntent setStartIntent() {
        AsrIntent asrIntent = new AsrIntent();
        asrIntent.setVadEndWaitMs(VAD_END_WAIT_MS);
        asrIntent.setVadFrontWaitMs(VAD_FRONT_WAIT_MS);
        asrIntent.setTimeoutThresholdMs(TIMEOUT_DURATION);
        return asrIntent;
    }

    class AudioCaptureRunnable implements Runnable {
        @Override
        public void run() {
            byte[] buffers = new byte[BYTES_LENGTH];
            audioCapturer.start();
            while (isRecord) {
                int ret = audioCapturer.read(buffers, 0, BYTES_LENGTH);
                if (ret <= 0) {
                    HiLog.error(TAG, "======Error read data");
                } else {
                    // Forward only the bytes actually read in this call
                    asrClient.writePcm(buffers, ret);
                }
            }
        }
    }
}
```
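In `onFrameFinished` the demo rotates the face rectangle with `rotateBy(Rect.RotationEnum.ROTATE_90)`, and the draw task moves the canvas origin to the centre of the `SurfaceProvider` before drawing. That combination suggests the detected rectangle is in the sensor's orientation and relative to the preview centre, but the thread doesn't say so, and the exact semantics of `rotateBy` should be checked in the SDK reference. As a framework-free illustration of that kind of mapping, the hypothetical helper below turns a rectangle 90° clockwise about the origin in screen coordinates (y pointing down) using (x, y) → (-y, x), then shifts it so the origin lands at the view centre.

```java
import java.util.Arrays;

/**
 * Hypothetical helper, not part of the HarmonyOS SDK: maps a rectangle given relative
 * to an origin at the view centre into view coordinates after a 90° clockwise turn.
 */
final class FaceRectMapper {
    private FaceRectMapper() {}

    /** Returns {left, top, right, bottom} in view coordinates (origin at top-left). */
    static float[] rotate90AndCenter(float left, float top, float right, float bottom,
                                     float viewWidth, float viewHeight) {
        // (x, y) -> (-y, x) is a 90° clockwise turn when the y axis points down,
        // so the new x range is [-bottom, -top] and the new y range is [left, right].
        float newLeft = -bottom;
        float newRight = -top;
        float newTop = left;
        float newBottom = right;
        // Shift the origin from the view centre to the top-left corner.
        float centerX = viewWidth / 2f;
        float centerY = viewHeight / 2f;
        return new float[] {newLeft + centerX, newTop + centerY, newRight + centerX, newBottom + centerY};
    }

    public static void main(String[] args) {
        float[] mapped = rotate90AndCenter(0f, 0f, 100f, 50f, 1000f, 2000f);
        System.out.println(Arrays.toString(mapped)); // [450.0, 1000.0, 500.0, 1100.0]
    }
}
```

Translating the canvas (as the demo does) and adding the centre offset to the coordinates (as here) are equivalent; the helper just makes the arithmetic explicit so it can be checked off-device.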

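Finally got it working. The preview and the box only show up together with "window transparent + SurfaceProvider not pinned to the Z-top"; the other three combinations don't work. The area outside the preview turning white was caused by the addDrawTask callback missing a non-null check and the statement to run in that branch. Thanks again 😉!

Where does the surfaceProvider above live: is it the same one as in initSurface(), or a new one created inside the FrameStateCallback?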


But with that, the app crashes as soon as it reaches surfaceProvider.invalidate() (which requests a redraw of surfaceProvider), with the error: Attempt to update UI in non-UI thread.

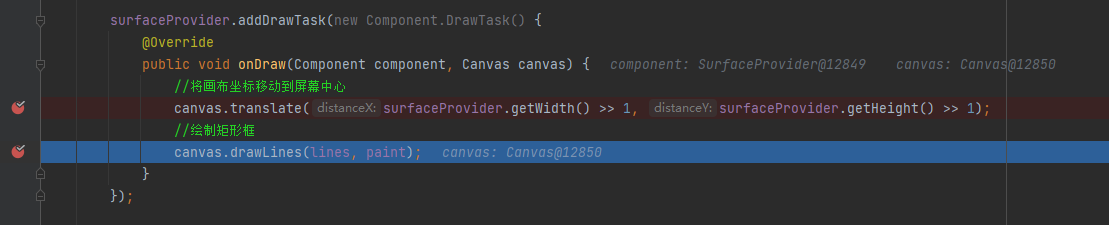

Debug it to check whether this code is actually reached, and look at the value of lines:

```java
canvas.translate(surfaceProvider.getWidth() >> 1, surfaceProvider.getHeight() >> 1);
canvas.drawLines(lines, paint);
```

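Those two lines only ran right after the camera opened; after that, execution just keeps cycling through onFrameFinished, where the face data is obtained.

This is how I set pinToZTop; I don't see anything else wrong… Check where your EventHandler is initialized.

If the window is not set to transparent, pinToZTop must be true; otherwise the preview is covered.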

```java
getWindow().setTransparent(true);
surfaceProvider = (SurfaceProvider) findComponentById(ResourceTable.Id_surface_provider);
surfaceProvider.pinToZTop(false);
```
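The "Attempt to update UI in non-UI thread" crash above means `invalidate()` ran on a thread other than the UI thread. In the demo, the frame callback is registered with `handler`, an `EventHandler` created from `EventRunner.current()` while the slice is being constructed on the UI thread, so `onFrameFinished` already runs there. If the callback is registered with a background handler instead, the redraw has to be posted back to the UI thread, for example through the UI task dispatcher (`getUITaskDispatcher().asyncDispatch(...)` in the Java API). The sketch below shows only that pattern, without any HarmonyOS classes: a single-threaded executor whose thread is named `ui` stands in for the UI thread, and the hypothetical `invalidate()` rejects calls from any other thread, the way the framework does.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Framework-free illustration of marshalling a redraw onto a single "UI" thread. */
final class UiThreadDemo {
    // Stand-in for the UI thread: one worker thread named "ui"
    private final ExecutorService uiThread = Executors.newSingleThreadExecutor(r -> new Thread(r, "ui"));
    final AtomicInteger invalidations = new AtomicInteger();

    /** Hypothetical stand-in for surfaceProvider.invalidate(): only legal on the "ui" thread. */
    void invalidate() {
        if (!"ui".equals(Thread.currentThread().getName())) {
            throw new IllegalStateException("Attempt to update UI in non-UI thread");
        }
        invalidations.incrementAndGet();
    }

    /** Called from a camera/background thread: hand the redraw over instead of calling it directly. */
    void onFrameFinished() {
        uiThread.execute(this::invalidate);
    }

    /** Stops the "ui" thread after it has run everything already posted. */
    void shutdownAndWait() {
        uiThread.shutdown();
        try {
            uiThread.awaitTermination(1, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        UiThreadDemo demo = new UiThreadDemo();
        Thread camera = new Thread(demo::onFrameFinished, "camera");
        camera.start();
        camera.join();
        demo.shutdownAndWait();
        System.out.println("invalidations = " + demo.invalidations.get());
    }
}
```

Posting the work rather than calling it directly is what keeps every UI mutation on one thread; the camera thread never touches the view.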

Fellow developers, feel free to jump in and help the original poster.

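Regarding the question in the title, "HarmonyOS Next: face detection and focus mode set in the frame configuration have no effect", here is an answer:

If face detection and focus mode settings in the frame configuration have no effect on HarmonyOS Next, the likely causes and fixes are: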

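- Permissions: make sure the app has been granted the camera permission and any permissions related to face detection. Check the app's permission settings and confirm that every required permission is enabled.
- API usage: check that the APIs are called correctly, that the API version you target is compatible with the HarmonyOS version on the device, and that the arguments are right.
- System restrictions: some HarmonyOS versions may restrict face detection and focus modes. Check the official HarmonyOS documentation for the limits of your system version.
- Hardware support: confirm that the device hardware supports face detection and focusing; some low-end devices may not support these features.
- Resource conflicts: check whether another app or service is holding the camera, which can prevent the settings from taking effect. Close other apps that use the camera and apply the settings again.
- System bugs: if all of the above checks out, the cause may be a bug in the system itself. Watch for official HarmonyOS updates and upgrade the system to pick up fixes.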
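For the permission item, the Java API used in this thread lets an ability check each permission with `verifySelfPermission(...)` against `IBundleManager.PERMISSION_GRANTED` and request missing ones with `requestPermissionsFromUser(...)`; the demo wraps this in its own `PermissionBridge` class. The hypothetical helper below isolates only the bookkeeping step (which required permissions are still missing from a set you pass in) so it runs without a device. `ohos.permission.CAMERA` is the camera permission; `ohos.permission.MICROPHONE` is included because the demo also records audio for voice-triggered capture.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical helper: on a device, "granted" would be built from verifySelfPermission results. */
final class PermissionCheck {
    private PermissionCheck() {}

    /** Returns the required permissions that are not in the granted set, in their original order. */
    static List<String> missing(List<String> required, Set<String> granted) {
        List<String> result = new ArrayList<>();
        for (String permission : required) {
            if (!granted.contains(permission)) {
                result.add(permission);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> required = Arrays.asList("ohos.permission.CAMERA", "ohos.permission.MICROPHONE");
        Set<String> granted = new HashSet<>(Arrays.asList("ohos.permission.CAMERA"));
        // Prints [ohos.permission.MICROPHONE]: that one still has to be requested
        System.out.println(missing(required, granted));
    }
}
```

Only the permissions this returns need to go into the request call; if the list is empty, the camera can be opened right away.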
