Using flutter_aiui, a Flutter plugin for speech recognition and voice interaction

The flutter_aiui plugin lets you use iFlytek's AIUI from a Flutter app on Android devices.

Key features

- Speech recognition
- Semantic understanding
- Speech synthesis
- Wake-up

Configuration

iOS

-

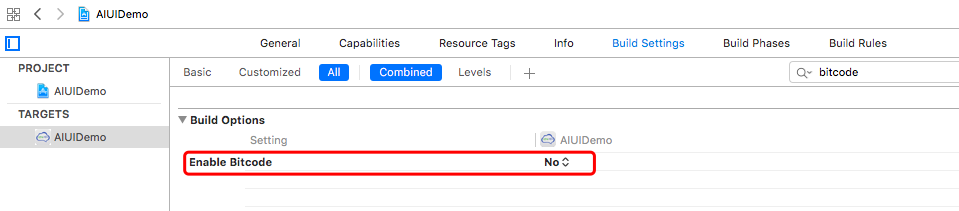

Bitcode设置

- Xcode 7 和 8 默认启用了Bitcode,而Bitcode需要项目依赖的所有类库都支持。目前AIUI SDK还不支持Bitcode,因此需要禁用该设置。在

Targets -> Build Settings中搜索Bitcode,并将其设置为NO。

- Xcode 7 和 8 默认启用了Bitcode,而Bitcode需要项目依赖的所有类库都支持。目前AIUI SDK还不支持Bitcode,因此需要禁用该设置。在

-

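- Add permissions

  Add the following permissions to the Info.plist file, and fill each <string> value with a short explanation of why the app needs the permission:

  <key>NSMicrophoneUsageDescription</key>
  <string></string>
  <key>NSLocationUsageDescription</key>
  <string></string>
  <key>NSLocationAlwaysUsageDescription</key>
  <string></string>
  <key>NSContactsUsageDescription</key>
  <string></string>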

Android

- Minimum SDK version

  Change minSdkVersion in android/app/build.gradle to 19 or higher:

  android {
      ...
      defaultConfig {
          ...
          minSdkVersion 19 // change to 19 or higher
          ...
      }
      ...
  }

- Permissions

  Add the following permissions to the AndroidManifest.xml file:

  <uses-permission android:name="android.permission.RECORD_AUDIO" />
  <uses-permission android:name="android.permission.INTERNET" />
  <uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />
  <uses-permission android:name="android.permission.ACCESS_NETWORK_STATE"/>

  To keep the iFlytek classes from being obfuscated when packaging or building the APK, also add the following to the proguard.cfg file:

  -dontoptimize
  -keep class com.iflytek.**{*;}
  -keepattributes Signature

- Request legacy external storage

  Add android:requestLegacyExternalStorage="true" to the <application> tag in the AndroidManifest.xml file:

  <application
      ...
      android:requestLegacyExternalStorage="true">
      ...
  </application>

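- Copy the resource files

  Copy the assets folder downloaded from the iFlytek AIUI platform into <project root>/android/app/src/main.

  Note: Android 11 no longer allows direct access to sdcard, so the resource path in the official configuration file prevents the wake-up feature from working. The res_path parameter of ivw therefore has to be generated dynamically. To use wake-up, delete the res_path parameter from the vtn.ini configuration file. After the deletion, vtn.ini looks like this:

  [auth]
  appid=xxxxxx

  [cae]
  # Whether noise reduction is enabled: 0 disables it, any other value enables it; enabled by default
  cae_enable = 1
  input_audio_unit=2

  [ivw]
  # Whether wake-up is enabled: 0 disables it, any other value enables it; disabled by default
  ivw_enable = 1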
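If you would rather strip res_path with a script than edit vtn.ini by hand, the removal can be sketched in plain Dart. The helper name stripResPath and the sample path are hypothetical and not part of flutter_aiui; the sketch only assumes that the parameter sits on its own line in the form res_path = ....

```dart
/// Removes every `res_path` entry from the text of a vtn.ini file.
/// Hypothetical helper for illustration; it is not part of flutter_aiui.
String stripResPath(String iniText) {
  // Matches lines such as "res_path = ..." with optional surrounding spaces.
  final RegExp resPathLine = RegExp(r'^\s*res_path\s*=');
  return iniText
      .split('\n')
      .where((String line) => !resPathLine.hasMatch(line))
      .join('\n');
}

void main() {
  // "/path/to/resource" is a placeholder, not a real AIUI resource path.
  const String original = '''
[auth]
appid=xxxxxx
[ivw]
res_path = /path/to/resource
ivw_enable = 1
''';
  print(stripResPath(original));
}
```

All other lines, including comments and section headers, pass through unchanged, so the result can be written straight back to the assets folder.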

Usage

Create the agent

To use any AIUI feature, first create an AIUI agent.

final AiuiParams _params = AiuiParams(
  appId: appId,
  global: GlobalParams(scene: 'main_box'),
  speech: SpeechParams(wakeupMode: WakeupMode.vtn),
);

await FlutterAiui().initAgent(_params);

Note: to use wake-up, set wakeupMode in the speech parameter of AiuiParams to WakeupMode.vtn and initialize the agent again.

Destroy the agent

FlutterAiui().destroyAgent();

Add a listener

Use a listener to receive AIUI events.

FlutterAiui().addListener(AiuiEventListener(onResult: () => {}));

Remove the listener

FlutterAiui().removeListener();

Set parameters

FlutterAiui().setParams(paramsJson);
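
The paramsJson argument is a JSON string. A minimal sketch of building one with dart:convert follows; the helper name buildParamsJson is hypothetical, and the "global"/"scene" layout is an assumption that mirrors GlobalParams(scene: ...) from the agent setup, so check the exact keys against the AIUI parameter documentation before relying on them.

```dart
import 'dart:convert';

/// Builds a JSON string that could be passed to setParams.
/// Hypothetical helper; the key layout is an assumption, not confirmed
/// by the plugin documentation.
String buildParamsJson({required String scene}) {
  return jsonEncode(<String, dynamic>{
    'global': <String, dynamic>{'scene': scene},
  });
}

void main() {
  final String paramsJson = buildParamsJson(scene: 'main_box');
  print(paramsJson); // {"global":{"scene":"main_box"}}
}
```

Building the string with jsonEncode rather than by concatenation keeps quoting and escaping correct when values come from user input.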

Start recording

FlutterAiui().startRecordAudio();

Stop recording

FlutterAiui().stopRecordAudio();

Send text for recognition

FlutterAiui().writeText('xxxxx');

Start speech synthesis

FlutterAiui().startTTS('xxxxxx');

Stop speech synthesis

FlutterAiui().stopTTS();

Sample code

import 'dart:async';

import 'package:flutter/material.dart';

import 'package:flutter_aiui/flutter_aiui.dart';

import 'package:flutter_aiui_example/wave.dart';

/// App ID of the application on the AIUI platform

const String appId = 'xxxxxxx';

void main() {

runApp(const MyApp());

}

enum FromType { user, aiui }

enum MsgType { text, voice }

class RawMessage<T> {

RawMessage({

required this.fromType,

required this.msgType,

required this.msgData,

this.msgVersion = 0,

this.cacheContent = '',

this.responseTime,

}) {

msgID = sMsgIDStore++;

}

static int sMsgIDStore = 0;

late int msgID;

int msgVersion;

int? responseTime;

FromType fromType;

MsgType msgType;

String cacheContent;

T msgData;

void versionUpdate() {

msgVersion++;

}

}

class MyApp extends StatelessWidget {

const MyApp({super.key});

@override

Widget build(BuildContext context) {

return const MaterialApp(home: VoiceAssistantView());

}

}

/// Voice assistant page

class VoiceAssistantView extends StatefulWidget {

const VoiceAssistantView({super.key});

@override

State<VoiceAssistantView> createState() => _VoiceAssistantViewState();

}

class _VoiceAssistantViewState extends State<VoiceAssistantView> {

late ScrollController _controller;

final AiuiParams _params = AiuiParams(

appId: appId,

global: GlobalParams(scene: 'main_box'),

speech: SpeechParams(wakeupMode: WakeupMode.vtn),

);

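/// Whether wake-up mode is enabled

bool get _wakeUpEnabled => _params.speech.wakeupMode == WakeupMode.vtn;

/// Whether the assistant is currently awake

bool _isWakeup = false;

/// Whether audio is currently being recorded

bool _isRecording = false;

/// The voice message still in progress; used when updating its dictation text

RawMessage? _appendVoiceMsg;

/// Current message list

final List<RawMessage> _interactMsg = [];

/// Slots used to assemble PGS (streaming) dictation results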

final List<String?> _iATPGSStack = List.filled(256, null);

final List<String> _interResultStack = [];

DateTime _audioStartTime = DateTime.now();

/// Switches wake-up mode

Future<void> _switchWakeupMode(bool value) async {

if (value) {

_params.speech.wakeupMode = WakeupMode.vtn;

await FlutterAiui().initAgent(_params);

} else {

_params.speech.wakeupMode = WakeupMode.off;

await FlutterAiui().initAgent(_params);

}

setState(() {});

}

/// Updates a message

void _updateMessage(RawMessage message) {

message.versionUpdate();

setState(() {});

}

/// Adds a message

void _addMessage(RawMessage rawMessage) {

_interactMsg.add(rawMessage);

setState(() {});

}

/// Sends text for recognition

Future<void> _writeText(String text) async {

await FlutterAiui().stopTTS();

if (_appendVoiceMsg != null) {

_interResultStack.clear();

_appendVoiceMsg = null;

}

await FlutterAiui().writeText(text);

_addMessage(

RawMessage<String>(

fromType: FromType.user,

msgType: MsgType.text,

msgData: text,

),

);

setState(() {});

}

/// Starts speaking

Future<void> _startSpeak() async {

await FlutterAiui().stopTTS();

if (!_isRecording) {

await FlutterAiui().startRecordAudio();

_isRecording = true;

}

if (!_wakeUpEnabled) {

_beginAudio();

}

setState(() {});

}

/// Starts recording

void _beginAudio() {

_audioStartTime = DateTime.now();

if (_appendVoiceMsg != null) {

// Update the previous, unfinished voice message

_updateMessage(_appendVoiceMsg!);

_appendVoiceMsg = null;

_interResultStack.clear();

}

for (int index = 0; index < _iATPGSStack.length; index++) {

_iATPGSStack[index] = null;

}

_appendVoiceMsg = RawMessage<int>(

fromType: FromType.user,

msgType: MsgType.voice,

msgData: 0,

);

_addMessage(_appendVoiceMsg!);

}

/// Stops speaking

Future<void> _endSpeak() async {

if (_isRecording) {

await FlutterAiui().stopRecordAudio();

_isRecording = false;

}

if (!_wakeUpEnabled) {

_endAudio();

}

setState(() {});

}

/// Stops recording

void _endAudio() {

if (_appendVoiceMsg != null) {

final Duration diff = DateTime.now().difference(_audioStartTime);

_appendVoiceMsg!.msgData = diff.inSeconds;

_updateMessage(_appendVoiceMsg!);

}

}

/// Parses a dictation result and updates the dictation text of the current voice message

void _processIatResult(IatResult result, int responseTime) {

final Pgs? pgs = result.pgs;

String voiceIAT = '';

if (pgs == null) {

if (result.text.isEmpty) {

return;

}

if (_appendVoiceMsg?.cacheContent.isNotEmpty == true) {

voiceIAT = _appendVoiceMsg!.cacheContent;

}

voiceIAT += result.text;

} else {

_iATPGSStack[result.sentence] = result.text;

// PGS results come in two modes: rpl (replace) and apd (append)

if (pgs == Pgs.rpl) {

final int start = result.rg![0];

final int end = result.rg![1];

for (int index = start; index <= end; index++) {

_iATPGSStack[index] = null;

}

}

// Concatenate the valid results left in the stack after the operations above

String pgsResult = '';

for (int index = 0; index < _iATPGSStack.length; index++) {

if (_iATPGSStack[index]?.isNotEmpty != true) {

continue;

}

pgsResult += _iATPGSStack[index]!;

// If this is the last dictation result, clear the stack for the next use

if (result.lastSentence) {

_iATPGSStack[index] = null;

}

}

voiceIAT =

_interResultStack.fold('', (pre, text) => pre + text) + pgsResult;

if (result.lastSentence) {

_interResultStack.add(pgsResult);

}

}

if (voiceIAT.isNotEmpty && _appendVoiceMsg != null) {

_appendVoiceMsg!.cacheContent = voiceIAT;

_appendVoiceMsg!.responseTime = responseTime;

_updateMessage(_appendVoiceMsg!);

}

}

/// Parses semantic-understanding data

void _processNlpResult(NlpResult result, int responseTime) {

// Handle a rejected result (rc = 4)

if (result.rc == Rc.cannotHandle && result.answer == null) {

const String text = '你好,我不懂你的意思\n\n在后台添加更多技能让我变得更聪明吧';

result.answer ??= const Answer(text: text);

FlutterAiui().startTTS(text);

}

final RawMessage rawMessage = RawMessage<NlpResult>(

fromType: FromType.aiui,

msgType: MsgType.text,

msgData: result,

responseTime: responseTime,

);

_addMessage(rawMessage);

}

/// Handles errors

void _onError(int errorCode, String errorMessage) {

String message;

// AIUI network errors do not affect interaction; log them as clues for troubleshooting

if (errorCode >= 10200 && errorCode <= 10215) {

debugPrint('AIUI Error: $errorCode');

return;

}

switch (errorCode) {

case 10120:

message = 'There is a network problem :( Please check your connection';

break;

case 11200:

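message = '11200 error\nThe 小娟 speaker is not enabled. Enable speech synthesis under the application configuration in the console, wait a minute for it to take effect, then restart the app';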

break;

case 20006:

message = 'Failed to start recording :( Please check whether another app is using the microphone';

break;

case 600002:

message = 'Wake-up error 600002\n The path to the wake-up configuration vtn.ini is wrong; please check the configuration path';

break;

case 600100:

message = 'Wake-up error 600100\n The wake-up resource file path is wrong; please check the resource path';

break;

case 600022:

message = 'Wake-up error 600022\n Not enough wake-up device licenses; please contact sales to enable them';

break;

default:

message = 'Error $errorCode: $errorMessage';

break;

}

final RawMessage rawMessage = RawMessage<String>(

fromType: FromType.aiui,

msgType: MsgType.text,

msgData: message,

);

_addMessage(rawMessage);

}

/// Wake-up callback

void _onWakeup(WakeupType type, String? message) {

debugPrint('AIUI Wakeup');

if (_wakeUpEnabled) {

// Stop playback automatically on wake-up

FlutterAiui().stopTTS();

_beginAudio();

setState(() {

_isWakeup = true;

});

}

}

/// Sleep callback

void _onSleep(SleepType type) {

debugPrint('AIUI Sleep');

// End the voice message when the agent goes to sleep

if (_isWakeup) {

_endAudio();

setState(() {

_isWakeup = false;

});

}

}

Future<void> _init() async {

await FlutterAiui().initAgent(_params);

FlutterAiui().addListener(

AiuiEventListener(

onError: _onError,

onVad: (event, [volume]) => debugPrint('Vad event:$event'),

onIatResult: _processIatResult,

onNlpResult: _processNlpResult,

onRecordStart: () {

setState(() {

_isRecording = true;

});

},

onRecordStop: () {

setState(() {

_isRecording = false;

});

},

onWakeup: _onWakeup,

onSleep: _onSleep,

),

);

}

@override

void initState() {

super.initState();

_init();

_controller = ScrollController();

}

@override

void dispose() {

FlutterAiui().destroyAgent();

_controller.dispose();

super.dispose();

}

@override

Widget build(BuildContext context) {

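Future.delayed(const Duration(milliseconds: 50), () {

// Skip the scroll if the widget is gone or the list is not attached yet

if (!mounted || !_controller.hasClients) {

return;

}

_controller.animateTo(

_controller.position.maxScrollExtent - 20,

duration: const Duration(milliseconds: 500),

curve: Curves.linear,

);

});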

final Widget list = ListView.builder(

padding: const EdgeInsets.all(12.0),

itemBuilder: (context, index) =>

_DialogueItem(message: _interactMsg[index]),

controller: _controller,

physics: const BouncingScrollPhysics(),

itemCount: _interactMsg.length,

);

final Widget bottom = _wakeUpEnabled

? Container(

color: Theme.of(context).colorScheme.surfaceTint.withOpacity(0.05),

height: 48.0,

width: double.infinity,

child: _isWakeup

? const Wave(

configs: [

WaveConfig(

amplitudes: 15,

frequency: 2,

gradients: [

Colors.white,

Colors.white54,

],

),

],

)

: Center(

child: Text(

'Say "小矿小矿" to wake me up',

style: Theme.of(context).textTheme.titleMedium,

),

),

)

: _ActionBar(_writeText, _startSpeak, _endSpeak);

return Scaffold(

appBar: AppBar(title: const Text('AIUI Demo')),

backgroundColor: Theme.of(context).colorScheme.surface,

drawer: Drawer(

child: SafeArea(

child: SwitchListTile(

title: const Text('Wake-up mode'),

value: _wakeUpEnabled,

onChanged: _switchWakeupMode,

),

),

),

body: Column(

crossAxisAlignment: CrossAxisAlignment.stretch,

children: [Expanded(child: list), bottom],

),

);

}

}

enum DialogueType { question, answer, line, bar, pie, table }

/// Dialogue bubble widget for the voice assistant

class _DialogueItem extends StatelessWidget {

const _DialogueItem({required this.message});

final RawMessage message;

static const EdgeInsetsGeometry padding =

EdgeInsets.symmetric(vertical: 8.0, horizontal: 12.0);

static const BorderRadius userBorderRadius = BorderRadius.only(

topLeft: Radius.circular(8.0),

topRight: Radius.circular(8.0),

bottomLeft: Radius.circular(8.0),

);

@override

Widget build(BuildContext context) {

if (message.fromType == FromType.user) {

if (message.msgType == MsgType.text) {

return _buildQuestion(context);

}

return _buildVoice(Theme.of(context).colorScheme);

}

return _buildResultText(context);

}

/// Question

Widget _buildQuestion(BuildContext context) {

final ColorScheme scheme = Theme.of(context).colorScheme;

final TextStyle? textStyle = Theme.of(context)

.textTheme

.bodyMedium

?.copyWith(color: scheme.onPrimary);

return Align(

alignment: Alignment.centerRight,

child: Card(

shape: const RoundedRectangleBorder(borderRadius: userBorderRadius),

color: scheme.primary,

child: Padding(

padding: padding,

child: Text(message.msgData, style: textStyle),

),

),

);

}

/// Voice

Widget _buildVoice(ColorScheme colorScheme) {

return Align(

alignment: Alignment.centerRight,

child: Card(

color: colorScheme.primary,

shape: const RoundedRectangleBorder(borderRadius: userBorderRadius),

child: Padding(

padding: const EdgeInsets.all(8.0),

child: Column(

crossAxisAlignment: CrossAxisAlignment.end,

children: [

Row(

mainAxisSize: MainAxisSize.min,

children: [

Icon(

Icons.volume_up_rounded,

color: colorScheme.outline,

size: 18,

),

const SizedBox(width: 4.0),

Text(

'${message.msgData}s',

style: TextStyle(color: colorScheme.outline, fontSize: 12),

),

],

),

if (message.cacheContent.isNotEmpty)

Text(

message.cacheContent,

style: TextStyle(color: colorScheme.onPrimary),

)

],

),

),

),

);

}

/// Text result

Widget _buildResultText(BuildContext context) {

final ThemeData theme = Theme.of(context);

final Color color = theme.colorScheme.surface;

const BorderRadius borderRadius = BorderRadius.only(

bottomLeft: Radius.circular(8.0),

topRight: Radius.circular(8.0),

bottomRight: Radius.circular(8.0),

);

final TextStyle? textStyle = theme.textTheme.bodyLarge;

const ShapeBorder shape =

RoundedRectangleBorder(borderRadius: borderRadius);

return Row(

crossAxisAlignment: CrossAxisAlignment.start,

children: [

const CircleAvatar(radius: 20, child: Icon(Icons.face)),

const SizedBox(width: 8.0),

Expanded(

child: Align(

alignment: Alignment.centerLeft,

child: Card(

shape: shape,

color: color,

child: Padding(

padding: padding,

child: Text(

message.msgData is String

? message.msgData

: (message.msgData as NlpResult).answer?.text ?? '',

style: textStyle,

),

),

),

),

)

],

);

}

}

/// Action bar

class _ActionBar extends StatefulWidget {

const _ActionBar(this.onSearch, this.onSpeakTapDown, this.onSpeakTapUp);

final ValueChanged<String> onSearch;

final VoidCallback onSpeakTapDown;

final VoidCallback onSpeakTapUp;

@override

State<_ActionBar> createState() => _ActionBarState();

}

class _ActionBarState extends State<_ActionBar>

with SingleTickerProviderStateMixin {

late TextEditingController _editingController;

late FocusNode _focusNode;

@override

void initState() {

super.initState();

_editingController = TextEditingController();

_focusNode = FocusNode();

}

@override

void dispose() {

_editingController.dispose();

_focusNode.dispose();

super.dispose();

}

@override

Widget build(BuildContext context) {

return Padding(

padding: const EdgeInsets.all(12.0),

child: Row(

children: [Expanded(child: _buildTextField()), _buildRecordButton()],

),

);

}

/// Text input field

Widget _buildTextField() {

const InputBorder border = OutlineInputBorder(

borderRadius: BorderRadius.all(Radius.circular(100)),

borderSide: BorderSide(width: 1.5),

);

final Widget button = AnimatedOpacity(

opacity: _editingController.text.isEmpty ? 0 : 1,

duration: const Duration(milliseconds: 100),

child: IconButton(

onPressed: () {

if (_editingController.text.isNotEmpty) {

widget.onSearch(_editingController.text);

// _editingController.clear();

}

},

icon: const Icon(Icons.send),

),

);

return Padding(

padding: const EdgeInsets.only(right: 16.0, left: 8.0),

child: TextField(

onChanged: (val) {

if (mounted) {

setState(() {});

}

},

focusNode: _focusNode,

controller: _editingController,

decoration: InputDecoration(

contentPadding: const EdgeInsets.symmetric(horizontal: 16),

hintText: 'Type what you want to search for',

border: border,

enabledBorder: border,

focusedBorder: border,

suffixIcon: button,

),

),

);

}

/// Record button

Widget _buildRecordButton() {

final ColorScheme theme = Theme.of(context).colorScheme;

return GestureDetector(

onTapDown: (TapDownDetails detail) => widget.onSpeakTapDown(),

onTapUp: (TapUpDetails detail) => widget.onSpeakTapUp(),

onTapCancel: widget.onSpeakTapUp,

child: Material(

shape: const CircleBorder(),

color: theme.inverseSurface,

child: Padding(

padding: const EdgeInsets.all(12.0),

child: Icon(

Icons.settings_voice_outlined,

color: theme.onInverseSurface,

),

),

),

);

}

}
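
The streaming (PGS) dictation handling in _processIatResult can be isolated into a small, plugin-independent sketch. Fragment and PgsAssembler are hypothetical names introduced here for illustration; Fragment is a reduced stand-in for the plugin's IatResult, not its real definition. Unlike the sample, the sketch clears the replaced range before storing the new fragment, so a fragment can never erase itself.

```dart
/// One streaming dictation fragment. Hypothetical stand-in for the plugin's
/// IatResult, reduced to the fields the merge needs.
class Fragment {
  const Fragment(this.sentence, this.text, {this.replaceRange});

  /// Sentence index of this fragment.
  final int sentence;

  /// Text recognized for this fragment.
  final String text;

  /// Inclusive start/end sentence indices to discard (rpl mode);
  /// null means append (apd mode).
  final List<int>? replaceRange;
}

/// Keeps the live fragments keyed by sentence index and rebuilds the text.
class PgsAssembler {
  final Map<int, String> _slots = <int, String>{};

  /// Applies one fragment and returns the text assembled so far.
  String apply(Fragment fragment) {
    final List<int>? range = fragment.replaceRange;
    if (range != null) {
      // rpl: drop the earlier fragments that this one supersedes.
      for (int index = range[0]; index <= range[1]; index++) {
        _slots.remove(index);
      }
    }
    _slots[fragment.sentence] = fragment.text;

    // Join the surviving fragments in sentence order.
    final List<int> order = _slots.keys.toList()..sort();
    return order.map((int key) => _slots[key]!).join();
  }
}

void main() {
  final PgsAssembler assembler = PgsAssembler();
  print(assembler.apply(const Fragment(1, 'what')));
  print(assembler.apply(
      const Fragment(2, 'what is the', replaceRange: [1, 1])));
  print(assembler.apply(const Fragment(3, ' weather')));
}
```

A map keyed by sentence index avoids the fixed 256-slot list used in the sample, at the cost of sorting the keys on every update; for the short utterances of a voice assistant that cost is negligible.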

More hands-on tutorials on using the flutter_aiui speech recognition and interaction plugin for Flutter are available at https://www.itying.com/category-92-b0.html

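flutter_aiui is a speech recognition and interaction plugin for Flutter. It integrates iFlytek's AIUI (Artificial Intelligence User Interface) technology and supports speech recognition, speech synthesis, semantic understanding, and more. With it, you can add voice interaction to a Flutter app.

The basic steps for using the flutter_aiui plugin are as follows:

1. Add the dependency

First, add the flutter_aiui dependency to the pubspec.yaml file:

dependencies:
  flutter:
    sdk: flutter
  flutter_aiui: ^0.0.1 # use the latest published version

Then run flutter pub get to fetch the dependency.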

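2. Configure permissions

Add the required permissions to AndroidManifest.xml and Info.plist. The configuration section earlier in this article lists further permissions used by the plugin.

AndroidManifest.xml:

<uses-permission android:name="android.permission.RECORD_AUDIO" />
<uses-permission android:name="android.permission.INTERNET" />

Info.plist (iOS):

<key>NSMicrophoneUsageDescription</key>
<string>We need access to the microphone for speech recognition</string>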

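3. Initialize the plugin

In your Dart code, initialize flutter_aiui by creating an AIUI agent with FlutterAiui().initAgent.

import 'package:flutter/material.dart';
import 'package:flutter_aiui/flutter_aiui.dart';

Future<void> main() async {
  WidgetsFlutterBinding.ensureInitialized();

  // Create the AIUI agent; replace the app ID with your own
  await FlutterAiui().initAgent(
    AiuiParams(
      appId: 'your_app_id',
      global: GlobalParams(scene: 'main_box'),
      speech: SpeechParams(wakeupMode: WakeupMode.off),
    ),
  );

  runApp(const MyApp());
}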

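4. Use speech recognition

Recording is started with FlutterAiui().startRecordAudio() and stopped with FlutterAiui().stopRecordAudio(). The recognized text is not returned by these calls; it arrives through the onIatResult callback of the registered listener.

import 'package:flutter/material.dart';
import 'package:flutter_aiui/flutter_aiui.dart';

class VoiceRecognitionPage extends StatefulWidget {
  const VoiceRecognitionPage({super.key});

  @override
  State<VoiceRecognitionPage> createState() => _VoiceRecognitionPageState();
}

class _VoiceRecognitionPageState extends State<VoiceRecognitionPage> {
  String _recognizedText = '';

  @override
  void initState() {
    super.initState();
    // Dictation results are delivered to the listener
    FlutterAiui().addListener(
      AiuiEventListener(
        onIatResult: (IatResult result, int responseTime) {
          setState(() {
            _recognizedText = result.text;
          });
        },
      ),
    );
  }

  @override
  void dispose() {
    FlutterAiui().removeListener();
    super.dispose();
  }

  Future<void> _startVoiceRecognition() async {
    try {
      await FlutterAiui().startRecordAudio();
    } catch (e) {
      debugPrint('Error: $e');
    }
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        title: const Text('Speech recognition'),
      ),
      body: Center(
        child: Column(
          mainAxisAlignment: MainAxisAlignment.center,
          children: [
            Text('Recognized text: $_recognizedText'),
            const SizedBox(height: 20),
            ElevatedButton(
              onPressed: _startVoiceRecognition,
              child: const Text('Start speech recognition'),
            ),
          ],
        ),
      ),
    );
  }
}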

5. Use speech synthesis

flutter_aiui can also synthesize speech from text. Call FlutterAiui().stopTTS() to stop playback.

void _textToSpeech(String text) {
  FlutterAiui().startTTS(text);
}

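6. Handle semantic understanding

flutter_aiui also supports semantic understanding. Send the text with FlutterAiui().writeText; the semantic result is delivered to the onNlpResult callback of the registered listener.

Future<void> _understandText(String text) async {
  // The result arrives in the listener's onNlpResult callback
  await FlutterAiui().writeText(text);
}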

7. Release resources

Destroy the AIUI agent when the widget that owns it is disposed.

@override
void dispose() {
  FlutterAiui().destroyAgent();
  super.dispose();
}

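8. Handle errors

Errors can occur at several points, so wrap API calls in error handling. Errors raised by AIUI itself are reported through the listener's onError callback, which receives an error code and a message.

try {
  await FlutterAiui().startRecordAudio();
} catch (e) {
  debugPrint('Error: $e');
}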