HarmonyOS Next: MindSpore Lite Kit (MindSpore inference framework service) reports an error when inference is run on the nnrt device

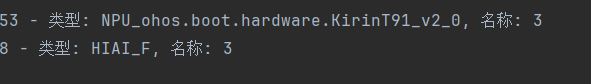

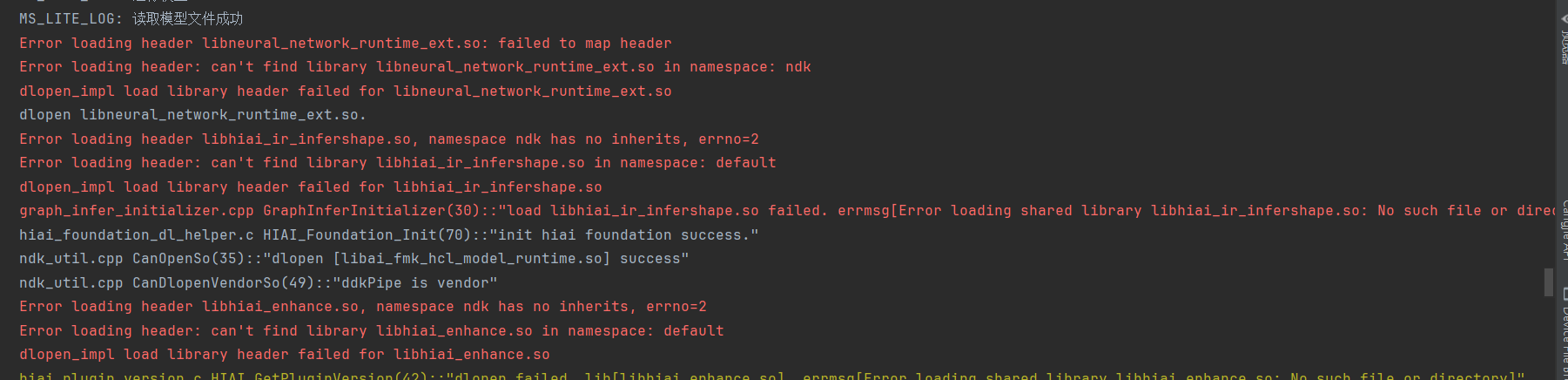

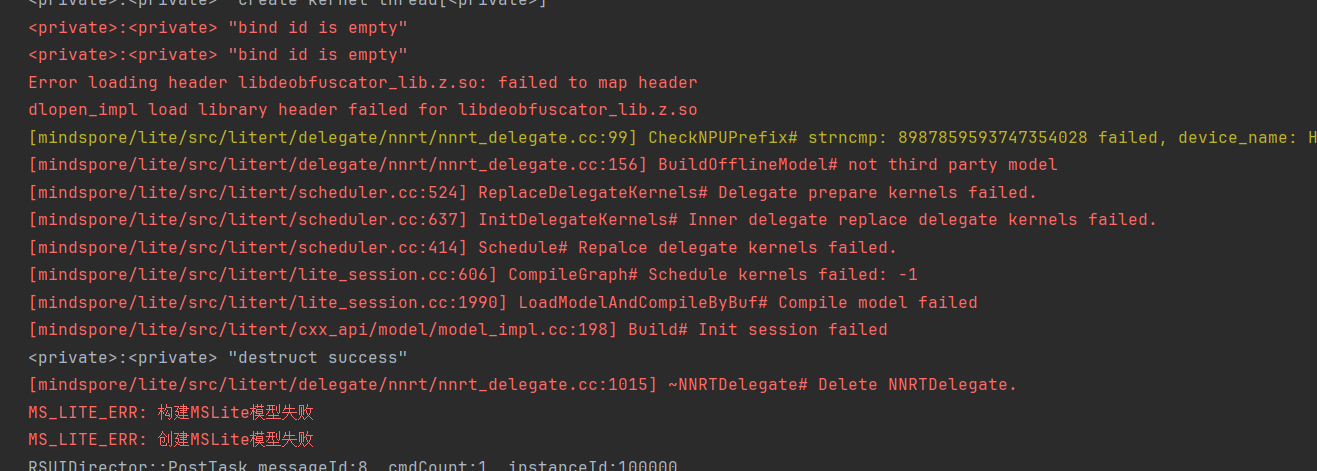

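As the title says, whether I use the ArkTS MindSpore API or the C++ MindSpore API, inference fails as soon as nnrt is specified as the accelerated inference device. With the CPU alone, the results are output normally. The error content is as follows.

The following is the error output from the log.

I hope someone can help me with this.

The complete cpp code follows. It is based on the sample repository https://gitee.com/harmonyos_samples/MindSporeLiteCpp, with the nnrt-related code added by hand and marked in red.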

#include <iostream>

#include <sstream>

#include <string>

#include <vector>

#include <stdlib.h>

#include <hilog/log.h>

#include <rawfile/raw_file_manager.h>

#include <mindspore/types.h>

#include <mindspore/model.h>

#include <mindspore/context.h>

#include <mindspore/status.h>

#include <mindspore/tensor.h>

#include "napi/native_api.h"

#define LOGI(...) ((void)OH_LOG_Print(LOG_APP, LOG_INFO, LOG_DOMAIN, "[MSLiteNapi]", __VA_ARGS__))

#define LOGD(...) ((void)OH_LOG_Print(LOG_APP, LOG_DEBUG, LOG_DOMAIN, "[MSLiteNapi]", __VA_ARGS__))

#define LOGW(...) ((void)OH_LOG_Print(LOG_APP, LOG_WARN, LOG_DOMAIN, "[MSLiteNapi]", __VA_ARGS__))

#define LOGE(...) ((void)OH_LOG_Print(LOG_APP, LOG_ERROR, LOG_DOMAIN, "[MSLiteNapi]", __VA_ARGS__))

void *ReadModelFile(NativeResourceManager *nativeResourceManager, const std::string &modelName, size_t *modelSize) {

auto rawFile = OH_ResourceManager_OpenRawFile(nativeResourceManager, modelName.c_str());

if (rawFile == nullptr) {

LOGE("MS_LITE_ERR: Open model file failed");

return nullptr;

}

long fileSize = OH_ResourceManager_GetRawFileSize(rawFile);

void *modelBuffer = malloc(fileSize);

if (modelBuffer == nullptr) {

LOGE("MS_LITE_ERR: malloc for model buffer failed");

// Close the raw file before returning so the handle is not leaked.

OH_ResourceManager_CloseRawFile(rawFile);

return nullptr;

}

int ret = OH_ResourceManager_ReadRawFile(rawFile, modelBuffer, fileSize);

if (ret == 0) {

LOGE("MS_LITE_ERR: OH_ResourceManager_ReadRawFile failed");

// Free the buffer on the failure path so it is not leaked.

free(modelBuffer);

OH_ResourceManager_CloseRawFile(rawFile);

return nullptr;

}

OH_ResourceManager_CloseRawFile(rawFile);

*modelSize = fileSize;

return modelBuffer;

}

void DestroyModelBuffer(void **buffer) {

if (buffer == nullptr) {

return;

}

free(*buffer);

*buffer = nullptr;

}

OH_AI_ModelHandle CreateMSLiteModel(void *modelBuffer, size_t modelSize) {

// Set executing context for model.

auto context = OH_AI_ContextCreate();

if (context == nullptr) {

DestroyModelBuffer(&modelBuffer);

LOGE("MS_LITE_ERR: Create MSLite context failed.\n");

return nullptr;

}

// 2. If NPU is enabled, try to create the NPU device first.

auto npu_device_info = OH_AI_CreateNNRTDeviceInfoByType(OH_AI_NNRTDEVICE_ACCELERATOR);

if (npu_device_info == nullptr) {

DestroyModelBuffer(&modelBuffer);

OH_AI_ContextDestroy(&context);

LOGE("MS_LITE_ERR: Create NPU device info failed");

return nullptr;

}

// Query the descriptions of all NNRt devices and log them.

size_t device_count = 0;

NNRTDeviceDesc* deviceDescs = OH_AI_GetAllNNRTDeviceDescs(&device_count);

if (deviceDescs != NULL) {

for (size_t i = 0; i < device_count; ++i) {

NNRTDeviceDesc* desc = OH_AI_GetElementOfNNRTDeviceDescs(deviceDescs, i);

if (desc != NULL) {

// Read the device information.

size_t deviceId = OH_AI_GetDeviceIdFromNNRTDeviceDesc(desc);

const char* deviceName = OH_AI_GetNameFromNNRTDeviceDesc(desc);

OH_AI_NNRTDeviceType deviceType = OH_AI_GetTypeFromNNRTDeviceDesc(desc);

// Print the device information (the name is a string, the type is an enum value).

LOGI("MS_LITE_LOG: device %zu - name: %s, type: %d", deviceId, deviceName, static_cast<int>(deviceType));

}

}

// Release the device description array.

OH_AI_DestroyAllNNRTDeviceDescs(&deviceDescs);

} else {

LOGW("MS_LITE_WARN: Failed to get NNRt device descriptions");

}

// Set the NPU to high-performance mode.

OH_AI_DeviceInfoSetPerformanceMode(npu_device_info, OH_AI_PERFORMANCE_HIGH);

// Add the NPU device to the context.

OH_AI_ContextAddDeviceInfo(context, npu_device_info);

LOGI("MS_LITE_LOG: Use the NPU device for inference");

auto cpu_device_info = OH_AI_DeviceInfoCreate(OH_AI_DEVICETYPE_CPU);

OH_AI_DeviceInfoSetEnableFP16(cpu_device_info, true);

OH_AI_ContextAddDeviceInfo(context, cpu_device_info);

// Create model

auto model = OH_AI_ModelCreate();

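if (model == nullptr) {

DestroyModelBuffer(&modelBuffer);

// The context has not been handed to a model yet, so release it here.

OH_AI_ContextDestroy(&context);

LOGE("MS_LITE_ERR: Allocate MSLite Model failed.\n");

return nullptr;

}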

// Build model object

auto build_ret = OH_AI_ModelBuild(model, modelBuffer, modelSize, OH_AI_MODELTYPE_MINDIR, context);

DestroyModelBuffer(&modelBuffer);

if (build_ret != OH_AI_STATUS_SUCCESS) {

OH_AI_ModelDestroy(&model);

LOGE("MS_LITE_ERR: Build MSLite model failed.\n");

return nullptr;

}

LOGI("MS_LITE_LOG: Build MSLite model success.\n");

return model;

}

constexpr int K_NUM_PRINT_OF_OUT_DATA = 20;

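int FillInputTensor(OH_AI_TensorHandle input, const std::vector<float> &input_data) {

if (OH_AI_TensorGetDataType(input) == OH_AI_DATATYPE_NUMBERTYPE_FLOAT32) {

float *data = (float *)OH_AI_TensorGetMutableData(input);

// Reject a missing buffer or too few input values instead of reading out of bounds.

size_t element_num = static_cast<size_t>(OH_AI_TensorGetElementNum(input));

if (data == nullptr || input_data.size() < element_num) {

return OH_AI_STATUS_LITE_ERROR;

}

for (size_t i = 0; i < element_num; i++) {

data[i] = input_data[i];

}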

return OH_AI_STATUS_SUCCESS;

} else {

return OH_AI_STATUS_LITE_ERROR;

}

}

int RunMSLiteModel(OH_AI_ModelHandle model, std::vector<float> input_data) {

// Set input data for model.

auto inputs = OH_AI_ModelGetInputs(model);

auto ret = FillInputTensor(inputs.handle_list[0], input_data);

if (ret != OH_AI_STATUS_SUCCESS) {

LOGE("MS_LITE_ERR: RunMSLiteModel set input error.\n");

return OH_AI_STATUS_LITE_ERROR;

}

// Get model output.

auto outputs = OH_AI_ModelGetOutputs(model);

// Predict model.

auto predict_ret = OH_AI_ModelPredict(model, inputs, &outputs, nullptr, nullptr);

if (predict_ret != OH_AI_STATUS_SUCCESS) {

// Do not destroy the model here: the caller still holds the handle and destroys it on failure.

LOGE("MS_LITE_ERR: MSLite Predict error.\n");

return OH_AI_STATUS_LITE_ERROR;

}

LOGI("MS_LITE_LOG: Run MSLite model Predict success.\n");

// Print output tensor data.

LOGI("MS_LITE_LOG: Get model outputs:\n");

for (size_t i = 0; i < outputs.handle_num; i++) {

auto tensor = outputs.handle_list[i];

LOGI("MS_LITE_LOG: - Tensor %d name is: %s.\n", static_cast<int>(i), OH_AI_TensorGetName(tensor));

LOGI("MS_LITE_LOG: - Tensor %d size is: %d.\n", static_cast<int>(i), (int)OH_AI_TensorGetDataSize(tensor));

LOGI("MS_LITE_LOG: - Tensor data is:\n");

auto out_data = reinterpret_cast<const float *>(OH_AI_TensorGetData(tensor));

std::stringstream outStr;

for (int64_t j = 0; (j < OH_AI_TensorGetElementNum(tensor)) && (j < K_NUM_PRINT_OF_OUT_DATA); j++) {

outStr << out_data[j] << " ";

}

LOGI("MS_LITE_LOG: %s", outStr.str().c_str());

}

return OH_AI_STATUS_SUCCESS;

}

static napi_value RunDemo(napi_env env, napi_callback_info info) {

LOGI("MS_LITE_LOG: Enter runDemo()");

napi_value error_ret;

napi_create_int32(env, -1, &error_ret);

size_t argc = 2;

napi_value argv[2] = {nullptr};

napi_get_cb_info(env, info, &argc, argv, nullptr, nullptr);

bool isArray = false;

napi_is_array(env, argv[0], &isArray);

if (!isArray) {

LOGE("MS_LITE_ERR: The first argument must be an array");

return error_ret;

}

uint32_t length = 0;

napi_get_array_length(env, argv[0], &length);

LOGI("MS_LITE_LOG: argv array length = %d", length);

std::vector<float> input_data;

double param = 0;

for (uint32_t i = 0; i < length; i++) {

napi_value value;

napi_get_element(env, argv[0], i, &value);

napi_get_value_double(env, value, &param);

input_data.push_back(static_cast<float>(param));

}

std::stringstream outstr;

for (size_t i = 0; i < static_cast<size_t>(K_NUM_PRINT_OF_OUT_DATA) && i < input_data.size(); i++) {

outstr << input_data[i] << " ";

}

LOGI("MS_LITE_LOG: input_data = %s", outstr.str().c_str());

// Read model file

const std::string modelName = "mobilenetv2.ms";

LOGI("MS_LITE_LOG: Run model: %s", modelName.c_str());

size_t modelSize = 0;

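auto resourcesManager = OH_ResourceManager_InitNativeResourceManager(env, argv[1]);

auto modelBuffer = ReadModelFile(resourcesManager, modelName, &modelSize);

// Release the native resource manager once the model file has been read.

OH_ResourceManager_ReleaseNativeResourceManager(resourcesManager);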

if (modelBuffer == nullptr) {

LOGE("MS_LITE_ERR: Read model failed");

return error_ret;

}

LOGI("MS_LITE_LOG: Read model file success");

auto model = CreateMSLiteModel(modelBuffer, modelSize);

if (model == nullptr) {

OH_AI_ModelDestroy(&model);

LOGE("MS_LITE_ERR: MSLiteFwk Build model failed.\n");

return error_ret;

}

int ret = RunMSLiteModel(model, input_data);

if (ret != OH_AI_STATUS_SUCCESS) {

OH_AI_ModelDestroy(&model);

LOGE("MS_LITE_ERR: RunMSLiteModel failed.\n");

return error_ret;

}

napi_value out_data;

napi_create_array(env, &out_data);

auto outputs = OH_AI_ModelGetOutputs(model);

OH_AI_TensorHandle output_0 = outputs.handle_list[0];

float *output0Data = reinterpret_cast<float *>(OH_AI_TensorGetMutableData(output_0));

for (size_t i = 0; i < OH_AI_TensorGetElementNum(output_0); i++) {

napi_value element;

napi_create_double(env, static_cast<double>(output0Data[i]), &element);

napi_set_element(env, out_data, i, element);

}

OH_AI_ModelDestroy(&model);

LOGI("MS_LITE_LOG: Exit runDemo()");

return out_data;

}

EXTERN_C_START

static napi_value Init(napi_env env, napi_value exports) {

napi_property_descriptor desc[] = {

{"runDemo", nullptr, RunDemo, nullptr, nullptr, nullptr, napi_default, nullptr}

};

napi_define_properties(env, exports, sizeof(desc) / sizeof(desc[0]), desc);

return exports;

}

EXTERN_C_END

static napi_module demoModule = {

.nm_version = 1,

.nm_flags = 0,

.nm_filename = nullptr,

.nm_register_func = Init,

.nm_modname = "entry",

.nm_priv = ((void*)0),

.reserved = {0},

};

extern "C" __attribute__((constructor))

void RegisterEntryModule(void) {

napi_module_register(&demoModule);

}
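
The code above frees the model buffer by hand on every exit path, which is easy to get wrong (a missed `free` leaks, a repeated one double-frees). A minimal sketch, assuming standard C++ only, of letting a `std::unique_ptr` own the `malloc`ed buffer instead. The names `FreeDeleter`, `ModelBuffer` and `AllocModelBuffer` are hypothetical and are not part of the sample repository or the MindSpore Lite API; the allocation stands in for `ReadModelFile` so the sketch runs without the SDK.

```cpp
#include <cstddef>
#include <cstdlib>
#include <cstring>
#include <memory>

// Hypothetical deleter: releases memory that was obtained with malloc.
struct FreeDeleter {
    void operator()(void *p) const noexcept { std::free(p); }
};

// Hypothetical alias: a malloc'ed buffer that frees itself when it goes out of scope.
using ModelBuffer = std::unique_ptr<void, FreeDeleter>;

// Hypothetical stand-in for ReadModelFile: allocates `size` bytes and zero-fills
// them instead of reading a raw file. Returns an empty pointer if malloc fails.
ModelBuffer AllocModelBuffer(std::size_t size) {
    ModelBuffer buffer(std::malloc(size));
    if (buffer) {
        std::memset(buffer.get(), 0, size);
    }
    return buffer;
}
```

With this shape, every early `return` releases the buffer automatically, and `buffer.get()` can be passed wherever a raw `void *` is expected.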

CMakeLists.txt file:

# Minimum CMake version

cmake_minimum_required(VERSION 3.5.0)

project(MindSporeLiteCpp)

# Set the project root path

set(NATIVERENDER_ROOT_PATH ${CMAKE_CURRENT_SOURCE_DIR})

if(DEFINED PACKAGE_FIND_FILE)

include(${PACKAGE_FIND_FILE})

endif()

# Include directories

include_directories(

${NATIVERENDER_ROOT_PATH}

${NATIVERENDER_ROOT_PATH}/include

${HMOS_SDK_NATIVE}/sysroot/usr/lib

)

# Add the native library

add_library(entry SHARED napi_init.cpp)

# Link libraries (duplicate entries for ace_napi and mindspore_lite_ndk removed)

target_link_libraries(entry PUBLIC

libace_napi.z.so

hilog_ndk.z

rawfile.z

libmindspore_lite_ndk.so

libneural_network_core.so

libneural_network_runtime.so

)

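Hello, developer. HarmonyOS Next currently supports inference on CPU and NNRT. When NNRT is used for inference, setting parallelEnable=true may cause the build to fail. If that is not the cause, please provide more information so that we can locate the problem.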

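Where does this need to be set?

Hello, I have revised the question post. The complete cpp file is shown above, with the nnrt-related code marked in red. Please help me find where the error is.

OK, please be patient. We will let you know as soon as we have a conclusion.

This is the source code provided on the official site. Please check whether it solves your problem: https://gitee.com/harmonyos_samples/MindSporeLiteArkTS

It does not solve it. My requirement is to run model inference on nnrt.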

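When running inference with MindSpore Lite Kit on HarmonyOS Next, an error when the nnrt device is specified may have the following causes:

- The device driver is not installed correctly or its version is incompatible;

- The MindSpore Lite version does not match the nnrt device;

- The model file has not been adapted for the nnrt device.

Check the device driver, the framework version and the model adaptation to make sure the environment is configured correctly.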
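
One way to narrow down which of these applies is to attempt the build with several device lists in turn and record which one succeeds. The following is only a sketch under stated assumptions: `BuildResult`, `BuildFn` and `BuildWithFallback` are hypothetical names, and the callback is a placeholder for whatever the real code does to build a model (in the C++ code of the question, creating a context, adding the device infos and calling `OH_AI_ModelBuild`). It does not call the MindSpore Lite API itself.

```cpp
#include <functional>
#include <string>
#include <vector>

// Hypothetical result of the whole attempt; not an SDK type.
struct BuildResult {
    bool ok;                // true if some device list built successfully
    std::string firstDevice; // first device of the list that worked, empty on failure
};

// Hypothetical callback: tries to build the model with the given device list
// and reports whether the build succeeded.
using BuildFn = std::function<bool(const std::vector<std::string> &devices)>;

// Try each candidate device list in order and stop at the first that builds.
BuildResult BuildWithFallback(const std::vector<std::vector<std::string>> &candidates,
                              const BuildFn &build) {
    for (const auto &devices : candidates) {
        if (devices.empty()) {
            continue;  // nothing to try for an empty list
        }
        if (build(devices)) {
            return {true, devices.front()};
        }
    }
    return {false, ""};
}
```

If an nnrt-only list fails while a CPU-only list succeeds with the same model file, the problem is specific to the nnrt path (driver, version, or model adaptation) rather than to the model loading code.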