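HarmonyOS Next: how many languages does Huawei's online speech-to-text API support? Is there documentation, can individual developers use it, and is there ArkTS reference documentation?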

I remember Huawei has an online speech recognition (speech-to-text) API. How many languages does it support? Is there documentation? Can individual developers use it? Is there any ArkTS documentation I can refer to?

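Hello developer:

Speech-to-text currently supports only offline mode, and the only supported language is Mandarin Chinese. Individual developers can integrate it. For details, see the documentation: Speech Recognition - Core Speech Kit - AI - Huawei HarmonyOS Developer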


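Background:

Converting speech to text here relies on two HarmonyOS capabilities: recording, to obtain an audio stream, and the speech recognizer, which turns that stream into text.

For the first, use AudioCapturer to implement audio recording.

For the second, use Core Speech Kit (basic speech service) - speech recognition.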
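Both pieces have to agree on a single PCM format: the recorder configuration and the recognizer's `audioInfo` in the code below both use 16 kHz, mono, 16-bit samples. A quick calculation shows how much audio one 1280-byte chunk handed to the recognizer covers. This is a minimal sketch in plain TypeScript with no HarmonyOS APIs; the helper names `pcmBytesPerSecond` and `chunkDurationMs` are illustrative only.

```typescript
// Bytes of raw PCM produced per second for a given format.
function pcmBytesPerSecond(sampleRate: number, channels: number, bitsPerSample: number): number {
  return sampleRate * channels * (bitsPerSample / 8);
}

// Duration in milliseconds covered by a chunk of `bytes` bytes.
function chunkDurationMs(bytes: number, sampleRate: number, channels: number, bitsPerSample: number): number {
  // Multiply first so the division stays exact for these integer inputs
  return (bytes * 1000) / pcmBytesPerSecond(sampleRate, channels, bitsPerSample);
}

// 16 kHz, mono, 16-bit: the format used by both the recorder and the recognizer
const bytesPerSecond = pcmBytesPerSecond(16000, 1, 16);
const frameMs = chunkDurationMs(1280, 16000, 1, 16);
console.log(`${bytesPerSecond} bytes/s, one 1280-byte chunk = ${frameMs} ms`);
```

At 32,000 bytes per second, a 1280-byte chunk holds 40 ms of audio and a 640-byte chunk holds 20 ms.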

Solution:

Code example:

```typescript
import { speechRecognizer } from '@kit.CoreSpeechKit';
import { BusinessError, Callback } from '@ohos.base';
import { emitter } from '@kit.BasicServicesKit';
import { audioCapturerUtils } from '../utils/AudioCapturerUtils';

// Session ID shared by startListening, writeAudio and finish
const SESSION_ID: string = '123456';
// writeAudio accepts audio chunks of 640 or 1280 bytes
const FRAME_SIZE: number = 1280;

// 1. Declare the speech recognition engine
let asrEngine: speechRecognizer.SpeechRecognitionEngine;

// Engine creation parameters
let extraParams: Record<string, Object> = { "locate": "CN", "recognizerMode": "short" };
let initParamsInfo: speechRecognizer.CreateEngineParams = {
  language: 'zh-CN',
  online: 1, // despite the name, 1 selects the offline engine, currently the only supported mode
  extraParams: extraParams
};

@Entry
@Component
struct AudioPage {
  @State message: string = 'Hello World';
  @State recognizeText: string = '';
  // Query parameters; the session ID is reused when calling finish()
  languageQuery: speechRecognizer.LanguageQuery = {
    sessionId: SESSION_ID
  };

  aboutToAppear(): void {
    // Create the engine, then register the recognition listener
    speechRecognizer.createEngine(initParamsInfo, (err: BusinessError,
      speechRecognitionEngine: speechRecognizer.SpeechRecognitionEngine) => {
      if (!err) {
        // 2. Keep the created engine instance
        asrEngine = speechRecognitionEngine;
        asrEngine.setListener(this.listener());
      } else {
        console.error(`Failed to create engine. Code: ${err.code}, message: ${err.message}.`);
      }
    });

    // 8. Callback that receives the recognized text and updates the UI
    let callback: Callback<emitter.EventData> = (eventData: emitter.EventData) => {
      console.info(`eventData: ${JSON.stringify(eventData)}`);
      this.recognizeText = `${eventData.data?.['text'] ?? ''}`;
    };
    // Subscribe to the event
    emitter.on('recognizeText', callback);
  }

  aboutToDisappear(): void {
    // Unsubscribe and release the recorder and the engine when the page goes away
    emitter.off('recognizeText');
    audioCapturerUtils.release();
    asrEngine?.shutdown();
  }

  // 3. Build the recognition listener
  listener(): speechRecognizer.RecognitionListener {
    let setListener: speechRecognizer.RecognitionListener = {
      // 5. Called once recognition has started successfully
      async onStart(sessionId: string, eventMessage: string) {
        console.info(`onStart, sessionId: ${sessionId} eventMessage: ${eventMessage}`);
        // 6. After "On" is tapped, this runs first: set up the recorder
        let res = await audioCapturerUtils.init((buffer: ArrayBuffer) => {
          console.log('Captured audio bytes: ' + buffer.byteLength);
          // Split the captured buffer into 1280-byte chunks instead of keeping only
          // the first 1280 bytes (a trailing partial chunk is dropped here)
          for (let offset = 0; offset + FRAME_SIZE <= buffer.byteLength; offset += FRAME_SIZE) {
            asrEngine.writeAudio(SESSION_ID, new Uint8Array(buffer.slice(offset, offset + FRAME_SIZE)));
          }
        });
        // Start recording once the recorder is ready
        if (res.getSuccess()) {
          console.log('info ' + res.getMsg());
          audioCapturerUtils.start();
        }
      },
      // Event callback
      onEvent(sessionId: string, eventCode: number, eventMessage: string) {
        console.info(`onEvent, sessionId: ${sessionId} eventCode: ${eventCode} eventMessage: ${eventMessage}`);
      },
      // Recognition results, both intermediate and final
      onResult(sessionId: string, result: speechRecognizer.SpeechRecognitionResult) {
        console.info(`onResult, sessionId: ${sessionId} result: ${JSON.stringify(result)}`);
        // `this.recognizeText = result.result` does not update the UI here: with method
        // shorthand, `this` is this listener object, not the component
        // 7. Forward the text to the component through emitter instead
        emitter.emit('recognizeText', { data: { text: result.result } });
      },
      // Recognition complete
      onComplete(sessionId: string, eventMessage: string) {
        console.info(`onComplete, sessionId: ${sessionId} eventMessage: ${eventMessage}`);
        audioCapturerUtils.stop();
      },
      // Error callback; error codes are delivered here.
      // 1002200002: failed to start recognition, raised when startListening is called again while running
      onError(sessionId: string, errorCode: number, errorMessage: string) {
        console.error(`onError, sessionId: ${sessionId} errorCode: ${errorCode} errorMessage: ${errorMessage}`);
        // Stop recording on error as well
        audioCapturerUtils.stop();
      },
    };
    return setListener;
  }

  build() {
    Column() {
      Text(`Recognized: ${this.recognizeText}`)
        .fontSize(20)
        .fontColor(Color.Black)
        .fontWeight(FontWeight.Bold)
        .textAlign(TextAlign.Center)

      Button('Speech to text - Off')
        .fontSize(20)
        .fontColor(Color.White)
        .fontWeight(FontWeight.Bold)
        .onClick(() => {
          // Stop manually
          asrEngine.finish(this.languageQuery.sessionId);
          audioCapturerUtils.stop();
        })

      Button('Speech to text - On')
        .fontSize(20)
        .fontColor(Color.White)
        .fontWeight(FontWeight.Bold)
        .onClick(() => {
          // Parameters for starting recognition; they must match the recorder format
          let recognizerParams: speechRecognizer.StartParams = {
            sessionId: SESSION_ID,
            audioInfo: {
              audioType: 'pcm',
              sampleRate: 16000,
              soundChannel: 1,
              sampleBit: 16
            }
          };
          // 4. Start recognition
          asrEngine.startListening(recognizerParams);
          this.recognizeText = 'Recognition started';
        })
    }
    .height('100%')
    .width('100%')
  }
}
```
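The comment in `onResult` above notes that assigning `this.recognizeText` directly does not update the UI. A likely cause is how `this` is bound: a callback written in method shorthand (`onResult(...) { }`) receives the listener object as `this`, whereas an arrow function captures the `this` of the enclosing component. The official sample in a later answer uses arrow functions for `onStart` and `onResult` and assigns its state field directly. The plain-TypeScript sketch below reproduces the difference; `Listener` and `Page` are hypothetical stand-ins for `speechRecognizer.RecognitionListener` and the page struct, and no HarmonyOS API is involved.

```typescript
// Hypothetical stand-in for speechRecognizer.RecognitionListener
interface Listener {
  onResult(text: string): void;
}

class Page {
  recognizeText: string = '';

  // Method shorthand: `this` inside onResult is the object literal, not the Page
  methodStyleListener(): Listener {
    const listener = {
      recognizeText: '', // declared only so that the assignment below type-checks
      onResult(text: string) {
        this.recognizeText = text; // writes to the listener object
      },
    };
    return listener;
  }

  // Arrow function: `this` is captured from the enclosing method, i.e. the Page
  arrowStyleListener(): Listener {
    return {
      onResult: (text: string) => {
        this.recognizeText = text; // writes to the Page
      },
    };
  }
}

const page = new Page();
page.methodStyleListener().onResult('hello');
console.log(JSON.stringify(page.recognizeText)); // still ""
page.arrowStyleListener().onResult('hello');
console.log(JSON.stringify(page.recognizeText)); // "hello"
```

With arrow-function callbacks, the emitter round trip in the page above should not be needed to update `recognizeText`.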

```typescript
// Recording utility class (imported by the page as '../utils/AudioCapturerUtils')
import { audio } from '@kit.AudioKit';
import { fileIo as fs } from '@kit.CoreFileKit';
import { BusinessError, Callback } from '@ohos.base';
import { common } from '@kit.AbilityKit';
import { AuthUtil, OutDTO } from '@yunkss/eftool';

class Options {
  offset?: number;
  length?: number;
}

const TAG = 'AudioCapturerDemo';

export class AudioCapturerUtils {
  bufferSize: number = 0;
  audioCapturer: audio.AudioCapturer | undefined = undefined;
  // Note: this exact configuration is required (16 kHz, mono, 16-bit raw PCM, matching the
  // recognizer's audioInfo). Other settings make the audio stream larger and it cannot be recognized.
  audioStreamInfo: audio.AudioStreamInfo = {
    samplingRate: audio.AudioSamplingRate.SAMPLE_RATE_16000, // Sampling rate
    channels: audio.AudioChannel.CHANNEL_1, // Channels
    sampleFormat: audio.AudioSampleFormat.SAMPLE_FORMAT_S16LE, // Sample format
    encodingType: audio.AudioEncodingType.ENCODING_TYPE_RAW // Encoding type
  };
  audioCapturerInfo: audio.AudioCapturerInfo = {
    source: audio.SourceType.SOURCE_TYPE_MIC, // Source type: microphone. Choose per scenario; see SourceType.
    capturerFlags: 0 // Capturer flags
  };
  audioCapturerOptions: audio.AudioCapturerOptions = {
    streamInfo: this.audioStreamInfo,
    capturerInfo: this.audioCapturerInfo
  };
  file: fs.File | null = null;
  readDataCallback: Callback<ArrayBuffer> = (data: ArrayBuffer) => {
    console.log('Received audio bytes: ' + data.byteLength);
  };

  // Initialize the capturer and deliver captured audio to resultCallback
  async init(resultCallback: (buffer: ArrayBuffer) => void): Promise<OutDTO<string>> {
    // Recording requires the microphone permission, which must also be declared
    // under requestPermissions (see Appendix 1)
    let isAuth = await AuthUtil.checkPermissions('ohos.permission.MICROPHONE');
    if (!isAuth) {
      let res = await AuthUtil.reqPermissions('ohos.permission.MICROPHONE');
      if (res < 0) {
        return OutDTO.Error('The user declined the microphone permission');
      }
    }
    this.audioCapturer = await audio.createAudioCapturer(this.audioCapturerOptions);
    if (this.audioCapturer !== undefined) {
      // Deliver captured audio data to the callback
      (this.audioCapturer as audio.AudioCapturer).on('readData', resultCallback);
      return OutDTO.OK('Audio capturer initialized');
    } else {
      return OutDTO.Error('Failed to initialize the audio capturer');
    }
  }

  // Start recording
  start() {
    if (this.audioCapturer !== undefined) {
      let stateGroup = [audio.AudioState.STATE_PREPARED, audio.AudioState.STATE_PAUSED,
        audio.AudioState.STATE_STOPPED];
      // Capture can start only in STATE_PREPARED, STATE_PAUSED or STATE_STOPPED
      if (stateGroup.indexOf(this.audioCapturer.state.valueOf()) === -1) {
        console.error(`${TAG}: start failed`);
        return;
      }
      // Start capturing
      this.audioCapturer.start((err: BusinessError) => {
        if (err) {
          console.error('Capturer start failed.');
        } else {
          console.info('Capturer start success.');
        }
      });
    }
  }

  // Stop recording
  stop() {
    if (this.audioCapturer !== undefined) {
      // The capturer can be stopped only in STATE_RUNNING or STATE_PAUSED
      if (this.audioCapturer.state.valueOf() != audio.AudioState.STATE_RUNNING &&
        this.audioCapturer.state.valueOf() != audio.AudioState.STATE_PAUSED) {
        console.info('Capturer is not running or paused');
        return;
      }
      // Stop capturing
      this.audioCapturer.stop((err: BusinessError) => {
        if (err) {
          console.error('Capturer stop failed.');
        } else {
          // fs.close(this.file);
          console.info('Capturer stop success.');
        }
      });
    }
  }

  // Release the recorder
  release() {
    if (this.audioCapturer !== undefined) {
      // Release only when the state is neither STATE_RELEASED nor STATE_NEW
      if (this.audioCapturer.state.valueOf() === audio.AudioState.STATE_RELEASED ||
        this.audioCapturer.state.valueOf() === audio.AudioState.STATE_NEW) {
        console.info('Capturer already released');
        return;
      }
      // Release resources
      this.audioCapturer.release((err: BusinessError) => {
        if (err) {
          console.error('Capturer release failed.');
        } else {
          console.info('Capturer release success.');
        }
      });
    }
  }
}

export const audioCapturerUtils = new AudioCapturerUtils();
```
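The `readData` callback registered in `init()` delivers buffers whose size depends on the device, while the page passes 1280-byte chunks to `writeAudio`. Instead of discarding bytes that do not fill a whole chunk, a small framer can carry the remainder over into the next callback. This is a minimal sketch in plain TypeScript; `PcmFramer` is a hypothetical helper, not part of AudioKit or Core Speech Kit, and its `onFrame` callback is where a call such as `asrEngine.writeAudio(sessionId, frame)` would go.

```typescript
// Hypothetical helper: regroups arbitrarily sized PCM buffers into fixed-size frames.
class PcmFramer {
  private pending: Uint8Array = new Uint8Array(0);

  constructor(private readonly frameSize: number,
              private readonly onFrame: (frame: Uint8Array) => void) {}

  // Feed one captured buffer; emits every complete frame and keeps the remainder.
  push(buffer: ArrayBuffer): void {
    const incoming = new Uint8Array(buffer);
    const data = new Uint8Array(this.pending.length + incoming.length);
    data.set(this.pending, 0);
    data.set(incoming, this.pending.length);

    let offset = 0;
    while (offset + this.frameSize <= data.length) {
      // slice() copies, so the consumer may keep the frame
      this.onFrame(data.slice(offset, offset + this.frameSize));
      offset += this.frameSize;
    }
    this.pending = data.slice(offset);
  }

  // Number of bytes waiting for the next buffer.
  get pendingBytes(): number {
    return this.pending.length;
  }

  // Drop leftover bytes, e.g. when a recognition session ends.
  reset(): void {
    this.pending = new Uint8Array(0);
  }
}

// Usage: record frame sizes instead of calling writeAudio
const frames: number[] = [];
const framer = new PcmFramer(1280, (frame) => frames.push(frame.length));
framer.push(new ArrayBuffer(2000)); // one frame, 720 bytes carried over
framer.push(new ArrayBuffer(2000)); // 720 + 2000 = 2720 bytes: two frames, 160 carried over
console.log(frames, framer.pendingBytes);
```

Calling `reset()` from `onComplete` keeps leftover bytes from one session out of the next.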

Demo on a real device:

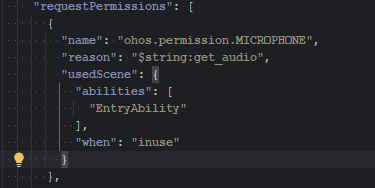

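Appendix 1 – Adding the permission: declare `ohos.permission.MICROPHONE` in the `requestPermissions` list of the module's `module.json5`. It is a user-granted permission, so it must also be requested at runtime, which `init()` in the utility class does.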

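Hello, the online speech recognition (speech-to-text) API is Core Speech Kit.

Speech recognition supports only one language: Mandarin Chinese.

The documentation is at https://developer.huawei.com/consumer/cn/doc/harmonyos-guides/speechrecognizer-guide

Individual developers can use it, and the guide includes detailed development steps and sample code. I hope this helps.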

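Core Speech Kit supports only offline speech recognition, and only Mandarin Chinese.

So I would rather recommend sherpa-onnx/harmony-os at master · k2-fsa/sherpa-onnx: it supports streaming speech recognition, responds faster, and lets you choose a model that suits your needs for on-device deployment.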

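What you need is probably Core Speech Kit.

Features: text-to-speech (TextToSpeech) and speech recognition (SpeechRecognizer), which convert between live speech input and text so that users can interact with the device.

Supported devices: Phone, Tablet, PC/2in1

Supported countries/regions: Chinese mainland only (excluding Hong Kong, Macao and Taiwan)

Text-to-speech specifications:

- Supported languages: Chinese and English (Simplified Chinese, Traditional Chinese, and English in a Chinese-language context).

- Supported voices: 聆小珊 (Ling Xiaoshan) female voice, English (US) Laura female voice, 凌飞哲 (Ling Feizhe) male voice.

- Text length: at most 10,000 characters.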

What you mean is probably Core Speech Kit.

Reference code:

```typescript
import { speechRecognizer } from '@kit.CoreSpeechKit';
import { BusinessError } from '@kit.BasicServicesKit';
import { fileIo } from '@kit.CoreFileKit';
import { PromptAction } from '@kit.ArkUI';
import FileCapturer from './FileCapturer';

const TAG = 'AsrDemo';

let asrEngine: speechRecognizer.SpeechRecognitionEngine;

@Entry
@Component
struct Index {
  @State createCount: number = 0;
  @State result: boolean = false;
  @State voiceInfo: string = "";
  @State sessionId: string = "123456";
  @State sessionId2: string = "1234567";
  @State generatedText: string = "Default Text";
  @State uiContext: UIContext = this.getUIContext();
  @State promptAction: PromptAction = this.uiContext.getPromptAction();
  private mFileCapturer = new FileCapturer();

  build() {
    Column() {
      Scroll() {
        Column() {
          Row() {
            Column() {
              Text(this.generatedText)
                .fontColor($r('sys.color.ohos_id_color_text_secondary'))
            }
            .width('100%')
            .constraintSize({ minHeight: 100 })
            .border({ width: 1, radius: 5 })
            .backgroundColor('#d3d3d3')
            .padding(20)
            .alignItems(HorizontalAlign.Start)
          }
          .width('100%')
          .padding({ left: 20, right: 20, top: 20, bottom: 20 })

          Button() {
            Text("CreateEngineByCallback")
              .fontColor(Color.White)
              .fontSize(20)
          }
          .type(ButtonType.Capsule)
          .backgroundColor("#317AE7")
          .width("80%")
          .height(50)
          .margin(10)
          .onClick(async () => {
            this.createByCallback();
            this.createCount++;
            console.info(TAG, `CreateAsrEngine:createCount:${this.createCount}`);
            // Give the createEngine callback time to assign asrEngine before registering the listener
            await this.sleep(500);
            this.setListener();
            this.promptAction.showToast({
              message: 'CreateEngine succeeded!',
              duration: 2000
            });
          })

          Button() {
            Text("startRecording")
              .fontColor(Color.White)
              .fontSize(20)
          }
          .type(ButtonType.Capsule)
          .backgroundColor("#317AE7")
          .width("80%")
          .height(50)
          .margin(10)
          .onClick(() => {
            this.startRecording();
            this.promptAction.showToast({
              message: 'start Recording',
              duration: 2000
            });
          })

          Button() {
            Text("audioToText")
              .fontColor(Color.White)
              .fontSize(20)
          }
          .type(ButtonType.Capsule)
          .backgroundColor("#317AE7")
          .width("80%")
          .height(50)
          .margin(10)
          .onClick(() => {
            this.audioToText();
            this.promptAction.showToast({
              message: 'start audioToText',
              duration: 2000
            });
          })

          Button() {
            Text("queryLanguagesCallback")
              .fontColor(Color.White)
              .fontSize(20)
          }
          .type(ButtonType.Capsule)
          .backgroundColor("#317AE7")
          .width("80%")
          .height(50)
          .margin(10)
          .onClick(() => {
            try {
              this.queryLanguagesCallback();
              this.promptAction.showToast({
                message: 'queryLanguages succeeded!',
                duration: 2000
              });
            } catch (err) {
              this.generatedText = `Failed to query language information. message: ${err.message}.`;
              this.promptAction.showToast({
                message: 'queryLanguages failed!',
                duration: 2000
              });
            }
          })

          Button() {
            Text("shutdown")
              .fontColor(Color.White)
              .fontSize(20)
          }
          .type(ButtonType.Capsule)
          .backgroundColor("#317AA7")
          .width("80%")
          .height(50)
          .margin(10)
          .onClick(() => {
            // Release the engine
            try {
              asrEngine.shutdown();
              this.generatedText = `The engine has been released.`;
              this.promptAction.showToast({
                message: 'shutdown succeeded!',
                duration: 2000
              });
            } catch (err) {
              this.generatedText = `Failed to release engine. message: ${err.message}.`;
              this.promptAction.showToast({
                message: 'shutdown failed!',
                duration: 2000
              });
            }
          })
        }
        .layoutWeight(1)
      }
      .width('100%')
      .height('100%')
    }
  }

  // Create the engine (callback style)
  private createByCallback() {
    // Engine creation parameters
    let extraParam: Record<string, Object> = { "locate": "CN", "recognizerMode": "short" };
    let initParamsInfo: speechRecognizer.CreateEngineParams = {
      language: 'zh-CN',
      online: 1, // 1 selects the offline engine
      extraParams: extraParam
    };
    // Call createEngine
    speechRecognizer.createEngine(initParamsInfo, (err: BusinessError,
      speechRecognitionEngine: speechRecognizer.SpeechRecognitionEngine) => {
      if (!err) {
        console.info(TAG, 'succeeded in creating engine.');
        // Keep the created engine instance
        asrEngine = speechRecognitionEngine;
      } else {
        // 1002200001: creation failed (unsupported language or mode, init timeout, missing resources, etc.)
        // 1002200006: the engine is busy, usually because several apps use speech recognition at once
        // 1002200008: the engine has already been destroyed
        console.error(TAG, `Failed to create engine. Message: ${err.message}.`);
      }
    });
  }

  // Query supported languages (callback style)
  private queryLanguagesCallback() {
    // Query parameters
    let languageQuery: speechRecognizer.LanguageQuery = {
      sessionId: this.sessionId
    };
    // Call listLanguages
    asrEngine.listLanguages(languageQuery, (err: BusinessError, languages: Array<string>) => {
      if (!err) {
        // Currently supported languages
        console.info(TAG, `succeeded in listing languages, result: ${JSON.stringify(languages)}`);
        this.generatedText = `languages result: ${JSON.stringify(languages)}`;
      } else {
        console.error(TAG, `Failed to list languages. Message: ${err.message}.`);
        this.generatedText = `Failed to list languages. Message: ${err.message}.`;
      }
    });
  }

  // Recognize speech that the engine records from the microphone itself
  private startListeningForRecording() {
    // See AudioInfo for the audioInfo options
    let audioParam: speechRecognizer.AudioInfo = { audioType: 'pcm', sampleRate: 16000, soundChannel: 1, sampleBit: 16 };
    let extraParam: Record<string, Object> = {
      // 0: the engine records from the microphone (needs ohos.permission.MICROPHONE);
      // 1: the app supplies audio through writeAudio
      "recognitionMode": 0,
      "vadBegin": 2000,         // leading-silence timeout, ms
      "vadEnd": 3000,           // trailing-silence timeout, ms
      "maxAudioDuration": 20000 // maximum audio length, ms
    };
    let recognizerParams: speechRecognizer.StartParams = {
      sessionId: this.sessionId,
      audioInfo: audioParam,
      extraParams: extraParam
    };
    console.info(TAG, 'startListening start');
    asrEngine.startListening(recognizerParams);
  }

  // Write an audio stream: recognize audio read from a file
  private async audioToText() {
    try {
      this.setListener();
      // Parameters for starting recognition
      let audioParam: speechRecognizer.AudioInfo = { audioType: 'pcm', sampleRate: 16000, soundChannel: 1, sampleBit: 16 };
      let recognizerParams: speechRecognizer.StartParams = {
        sessionId: this.sessionId2,
        audioInfo: audioParam
      };
      // Start recognition
      asrEngine.startListening(recognizerParams);
      // Read audio from the first file in the resource directory
      let data: ArrayBuffer;
      let ctx = this.getUIContext().getHostContext() as Context;
      let filenames: string[] = fileIo.listFileSync(ctx.resourceDir);
      if (filenames.length <= 0) {
        console.error('length is null');
        return;
      }
      let filePath: string = ctx.resourceDir + '/' + filenames[0];
      (this.mFileCapturer as FileCapturer).setFilePath(filePath);
      this.mFileCapturer.init((dataBuffer: ArrayBuffer) => {
        data = dataBuffer;
        let uint8Array: Uint8Array = new Uint8Array(data);
        asrEngine.writeAudio(this.sessionId2, uint8Array);
      });
      await this.mFileCapturer.start();
      this.mFileCapturer.release();
    } catch (err) {
      this.generatedText = `Message: ${err.message}.`;
    }
  }

  // Microphone speech to text
  private async startRecording() {
    try {
      this.startListeningForRecording();
    } catch (err) {
      this.generatedText = `Message: ${err.message}.`;
    }
  }

  // Sleep
  private sleep(ms: number): Promise<void> {
    return new Promise(resolve => setTimeout(resolve, ms));
  }

  // Register the callbacks
  private setListener() {
    // Create the listener object
    let setListener: speechRecognizer.RecognitionListener = {
      // Recognition started successfully
      onStart: (sessionId: string, eventMessage: string) => {
        this.generatedText = '';
        console.info(TAG, `onStart, sessionId: ${sessionId} eventMessage: ${eventMessage}`);
      },
      // Event callback
      onEvent(sessionId: string, eventCode: number, eventMessage: string) {
        console.info(TAG, `onEvent, sessionId: ${sessionId} eventCode: ${eventCode} eventMessage: ${eventMessage}`);
      },
      // Recognition results, both intermediate and final
      onResult: (sessionId: string, result: speechRecognizer.SpeechRecognitionResult) => {
        console.info(TAG, `onResult, sessionId: ${sessionId} result: ${JSON.stringify(result)}`);
        this.generatedText = result.result;
      },
      // Recognition complete
      onComplete(sessionId: string, eventMessage: string) {
        console.info(TAG, `onComplete, sessionId: ${sessionId} eventMessage: ${eventMessage}`);
      },
      // Error callback; error codes are delivered here.
      // 1002200002: failed to start recognition, raised when startListening is called again while running
      // See the error code reference for more codes
      onError(sessionId: string, errorCode: number, errorMessage: string) {
        console.error(TAG, `onError, sessionId: ${sessionId} errorCode: ${errorCode} errorMessage: ${errorMessage}`);
      },
    };
    // Register the listener
    asrEngine.setListener(setListener);
  }
}
```
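The error codes quoted in the comments of both samples can be collected in one place so that `onError` and the `createEngine` callback log a readable reason. This is a minimal sketch in plain TypeScript: the map contains only the codes and descriptions that appear in this thread (1002200001, 1002200002, 1002200006, 1002200008), `describeAsrError` is a hypothetical helper, and the Core Speech Kit error code reference remains the authoritative list.

```typescript
// Core Speech Kit error codes quoted in the sample comments (not a complete list).
const ASR_ERROR_REASONS: ReadonlyMap<number, string> = new Map<number, string>([
  [1002200001, 'Engine creation failed: unsupported language or mode, init timeout, or missing resources'],
  [1002200002, 'Failed to start recognition: startListening was called again while already running'],
  [1002200006, 'Engine busy: usually several apps are using speech recognition at the same time'],
  [1002200008, 'Engine has already been destroyed'],
]);

// Returns a readable reason, falling back to the raw code and message for unknown codes.
function describeAsrError(code: number, message: string = ''): string {
  const reason = ASR_ERROR_REASONS.get(code);
  if (reason !== undefined) {
    return `${code}: ${reason}`;
  }
  return message ? `${code}: unknown error (${message})` : `${code}: unknown error`;
}

// Usage inside onError: console.error(TAG, describeAsrError(errorCode, errorMessage));
console.log(describeAsrError(1002200002));
console.log(describeAsrError(123, 'something else'));
```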

Documentation link

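Huawei's online speech-to-text API supports two languages: Mandarin Chinese and English. The documentation is in the HarmonyOS SDK docs on the Huawei Developer Alliance website; search for "语音识别" (speech recognition). Individual developers can use the service after registering a Huawei developer account and completing real-name verification. The ArkTS documentation, with speech recognition sample code, is in the "Media" section of the HarmonyOS application development guide.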

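Huawei's online speech recognition service (Speech Recognition) in HarmonyOS Next supports many languages, including Mandarin Chinese, English, some dialects (such as Cantonese and Sichuanese), and major foreign languages (such as Japanese and Spanish). For the exact list of supported languages and updates, see the "Supported languages" section of the official documentation.

The documentation is available on the Huawei Developer Alliance website (developer.huawei.com); search for "语音识别服务" or "Speech Recognition" to find the API reference, development guide and sample code. Individual developers can use the basic features for free, but some advanced features or high-concurrency scenarios may require pay-as-you-go billing; see the pricing information on the website.

For ArkTS, the official site provides complete HarmonyOS application development documentation and samples, including how to call the speech recognition API. Search the documentation for "ArkTS语音识别示例" (ArkTS speech recognition sample) or browse the HarmonyOS sample repositories on GitHub to find relevant code.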