Python image download code: sharing and implementation

    #!/usr/bin/env python
    # -*- coding: utf-8 -*-
    import os
    import re

    import requests

    HEADERS = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.181 Safari/537.36"}


    def getHTMLText(url):
        """Fetch the page source; return an empty string if the request fails."""
        try:
            r = requests.get(url, headers=HEADERS, timeout=10)
            r.raise_for_status()
            return r.text
        except requests.exceptions.RequestException as e:
            print(e)
            return ""


    def getURLList(html):
        """Return the absolute jpg/gif/png URLs found in html, de-duplicated in page order."""
        regex = r"https?://[\w./-]+\.(?:jpg|gif|png)"
        # dict.fromkeys drops duplicates while keeping first-seen order
        return list(dict.fromkeys(re.findall(regex, html)))


    def download(lst, filepath='img'):
        """Download every URL in lst into the directory filepath."""
        os.makedirs(filepath, exist_ok=True)
        filecounter = len(lst)
        for filenow, url in enumerate(lst, 1):
            filename = os.path.join(filepath, url.split('/')[-1])
            try:
                img = requests.get(url, headers=HEADERS, timeout=10)
                img.raise_for_status()
            except requests.exceptions.RequestException as e:
                print(e)
                continue
            print("Downloading {}/{} file name:{}".format(filenow, filecounter, os.path.basename(filename)))
            # Open the file only after the request succeeded, so a failed
            # download does not leave an empty file behind
            with open(filename, 'wb') as f:
                f.write(img.content)
            print("{} saved".format(filename))


    if __name__ == '__main__':
        url = input('please input the image url:')
        filepath = input('please input the download path:')
        html = getHTMLText(url)
        lst = getURLList(html)
        download(lst, filepath)
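To see what the extraction step picks up, here is a minimal sketch run against a made-up HTML snippet. The pattern is a tightened variant of the one in the script (an assumption, not necessarily the poster's exact regex), and `extract_image_urls` and the sample markup are illustrative names only.

```python
import re

# Tightened variant of the thread's pattern: absolute http(s) URLs ending in
# .jpg, .gif or .png. Relative paths are not matched, and a URL with a query
# string is cut off right after the extension.
IMAGE_URL_RE = re.compile(r"https?://[\w./-]+\.(?:jpg|gif|png)")


def extract_image_urls(html):
    """Return image URLs in page order with duplicates removed."""
    return list(dict.fromkeys(IMAGE_URL_RE.findall(html)))


sample_html = """
<img src="https://example.com/a/cat.jpg">
<img src="http://example.com/b/dog.png?size=large">
<img src="https://example.com/a/cat.jpg">
<img src="/static/relative.gif">
"""

print(extract_image_urls(sample_html))
```

The duplicate and the relative path are dropped, so pages that use relative `src` attributes would need `urllib.parse.urljoin` or an HTML parser instead of a regex.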

Requires the requests library.

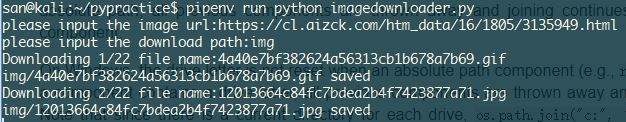

What a run looks like


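urllib.urlretrieve (urllib.request.urlretrieve in Python 3) works well for downloading images; give it a try.

Here is a practical piece of Python image-download code, with two implementations.

Method 1: using the requests library (recommended)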

    import hashlib
    import os

    import requests


    def download_image_requests(url, save_path, filename=None):
        """
        Download an image with the requests library.

        Parameters:
            url: image URL
            save_path: directory to save into
            filename: file name to save as (optional; defaults to the name in the URL)
        """
        try:
            # Create the target directory
            os.makedirs(save_path, exist_ok=True)

            # Send the request
            headers = {
                'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'
            }
            response = requests.get(url, headers=headers, stream=True, timeout=10)
            response.raise_for_status()

            # Decide on the file name
            if not filename:
                content_disposition = response.headers.get('Content-Disposition', '')
                if 'filename=' in content_disposition:
                    # Take the file name from the response header; basename()
                    # drops any directory parts the server may have sent
                    filename = os.path.basename(
                        content_disposition.split('filename=')[-1].strip('"\''))
                else:
                    # Extract the file name from the URL
                    filename = url.split('/')[-1].split('?')[0]
                if not filename or '.' not in filename:
                    # hash() of a str changes between runs, so use a stable digest
                    digest = hashlib.sha256(url.encode('utf-8')).hexdigest()[:12]
                    filename = f"image_{digest}.jpg"

            # Full path of the saved file
            filepath = os.path.join(save_path, filename)

            # Save the image chunk by chunk
            with open(filepath, 'wb') as f:
                for chunk in response.iter_content(chunk_size=8192):
                    if chunk:
                        f.write(chunk)

            print(f"Image saved to: {filepath}")
            return filepath

        except (requests.exceptions.RequestException, OSError) as e:
            print(f"Download failed: {e}")
            return None


    # Usage example
    if __name__ == "__main__":
        # Example URL
        image_url = "https://example.com/image.jpg"
        download_image_requests(image_url, "./downloads")

Method 2: using urllib (Python standard library)

    import hashlib
    import os
    import urllib.request


    def download_image_urllib(url, save_path, filename=None):
        """
        Download an image with urllib (no extra packages to install).
        """
        try:
            os.makedirs(save_path, exist_ok=True)

            if not filename:
                filename = url.split('/')[-1].split('?')[0]
                if not filename or '.' not in filename:
                    # hash() of a str changes between runs, so use a stable digest
                    digest = hashlib.sha256(url.encode('utf-8')).hexdigest()[:12]
                    filename = f"image_{digest}.jpg"

            filepath = os.path.join(save_path, filename)

            # Set the request header; install_opener() applies it process-wide
            opener = urllib.request.build_opener()
            opener.addheaders = [('User-Agent', 'Mozilla/5.0')]
            urllib.request.install_opener(opener)

            # Download and save
            urllib.request.urlretrieve(url, filepath)

            print(f"Image saved to: {filepath}")
            return filepath

        except Exception as e:
            print(f"Download failed: {e}")
            return None


    # Usage example
    if __name__ == "__main__":
        image_url = "https://example.com/image.jpg"
        download_image_urllib(image_url, "./downloads")

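Comparison of the two methods:

- The requests approach is more flexible: streaming downloads, timeouts and custom headers are all straightforward to set.
- urllib is part of the Python standard library, so no third-party package has to be installed.
- For large files, requests with stream=True uses less memory, because the body is written chunk by chunk instead of being held in memory all at once.

Short recommendation: for everyday use, go with requests; it is the more fully featured option.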

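#1 Off to cook; I'll revise it after dinner. For a small utility urllib is more convenient, since there is no dependency to install.

You haven't handled images with Chinese file names, have you?

They need to be URL-decoded.

Also, should special characters in the file name be handled? Some URLs may contain characters that can't be used in a file name.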
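The URL-decoding point can be sketched with the standard library alone. `filename_from_url` and its `default` argument are hypothetical names for illustration; the sketch assumes the file name is the last path segment of the URL and that percent-escapes are UTF-8.

```python
import os
from urllib.parse import unquote, urlsplit


def filename_from_url(url, default="image.jpg"):
    """Derive a readable local file name from the path part of a URL."""
    path = urlsplit(url).path                # drops the query string and fragment
    name = os.path.basename(unquote(path))   # turns %E5%9B%BE into the character it encodes
    return name or default


print(filename_from_url("https://example.com/img/%E5%9B%BE%E7%89%87.jpg?w=200"))
print(filename_from_url("https://example.com/"))
```

Decoding before taking the basename also means an escaped slash (`%2F`) inside the name cannot smuggle a directory component into the saved path.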

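For downloads and the like, I think it's better to call aria2, since aria2 can ensure the downloaded content is complete. If you download with a Python module, then when there's a network problem or an error, what you end up with may not be the whole file.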
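For readers who want to try that route, handing a URL to aria2 from Python could look roughly like the sketch below. It assumes the `aria2c` binary is installed and on `PATH`; `build_aria2_command` and `download_with_aria2` are hypothetical helper names, and only the `--continue`, `--dir` and `--out` options of `aria2c` are used.

```python
import shutil
import subprocess


def build_aria2_command(url, directory, filename=None):
    """Build an aria2c argument list for a single download."""
    # --continue=true resumes a partially downloaded file instead of restarting
    cmd = ["aria2c", "--continue=true", f"--dir={directory}"]
    if filename:
        cmd.append(f"--out={filename}")
    cmd.append(url)
    return cmd


def download_with_aria2(url, directory, filename=None):
    """Run aria2c if it is installed; return True when it exits successfully."""
    if shutil.which("aria2c") is None:
        print("aria2c not found on PATH")
        return False
    result = subprocess.run(build_aria2_command(url, directory, filename))
    return result.returncode == 0


print(build_aria2_command("https://example.com/a.jpg", "img"))
```

Passing the arguments as a list rather than a shell string avoids quoting problems when a URL contains `&` or spaces.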

#1 urlretrieve has a lot of pitfalls for downloading images: blurry images, files that won't open, and so on.

    #!/usr/bin/env python
    # -*- coding:utf-8 -*-
    import os
    import re
    import urllib.request
    from urllib.error import HTTPError, URLError

    USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.181 Safari/537.36"


    def getHTMLText(url):
        req = urllib.request.Request(url=url, headers={"User-Agent": USER_AGENT})
        try:
            with urllib.request.urlopen(req) as f:
                return f.read().decode('utf-8', errors='replace')
        except HTTPError as e:
            print('Error code:', e.code)
        except URLError as e:
            print('Error:', e.reason)
        # Return an empty page so getURLList() still gets a string
        return ""


    def getURLList(html):
        regex = r"https?://[\w./-]+\.(?:jpg|gif|png)"
        # dict.fromkeys drops duplicates while keeping first-seen order
        return list(dict.fromkeys(re.findall(regex, html)))


    def download(lst, filepath='img'):
        os.makedirs(filepath, exist_ok=True)
        # Install the opener once; urlretrieve then sends this User-Agent
        opener = urllib.request.build_opener()
        opener.addheaders = [("User-Agent", USER_AGENT)]
        urllib.request.install_opener(opener)
        for url in lst:
            filename = os.path.join(filepath, url.split('/')[-1])
            urllib.request.urlretrieve(url, filename)


    if __name__ == '__main__':
        url = input('please input the image url:')
        filepath = input('please input the download path:')
        html = getHTMLText(url)
        lst = getURLList(html)
        download(lst, filepath)
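One possible cause of a saved "image" that won't open is that the server answered with something else, such as an HTML error page, which was then written under a .jpg name. A rough way to catch that case is to look at the first bytes of the file. The sketch below is an illustration only: `sniff_image_type` and `looks_like_image` are hypothetical helpers, and checking the header does not detect a file that was cut off partway through.

```python
# Leading bytes ("magic numbers") of the three formats the thread's regex targets
IMAGE_SIGNATURES = {
    "jpg": (b"\xff\xd8\xff",),
    "png": (b"\x89PNG\r\n\x1a\n",),
    "gif": (b"GIF87a", b"GIF89a"),
}


def sniff_image_type(data):
    """Return 'jpg', 'png' or 'gif' if data starts like that format, else None."""
    for kind, signatures in IMAGE_SIGNATURES.items():
        if data.startswith(signatures):
            return kind
    return None


def looks_like_image(path):
    """Check the first bytes of a saved file; an HTML error page gives False."""
    with open(path, "rb") as f:
        return sniff_image_type(f.read(8)) is not None


print(sniff_image_type(b"\x89PNG\r\n\x1a\n" + b"\x00" * 16))
print(sniff_image_type(b"<!DOCTYPE html><html>"))
```

Calling `looks_like_image(filename)` right after each download would let the script report or delete files that are clearly not images.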

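#3 Chinese file names rarely show up in page URLs, I think, and adding encode handling would slow things down. As for special characters in file names: if the web page can resolve them, the local system should probably cope too. I've run into very few problems so far. Handle it when it actually comes up?

#4 For just downloading images, Python is enough; installing aria2 on top of that is a hassle. Surely you're not planning to download every image on 一级棒?

Yeah, I noticed that too: the image looks incomplete, but when I open it the picture is actually all there. Strange.

cl's download mechanism is really annoying; why don't you write a proper download tool for it?

It's still better to filter the name before settling on the file name.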

    def safefilename(filename):
        """
        convert a string to a safe filename
        :param filename: a string, may be url or name
        :return: the string with special chars removed
        """
        # Remove characters that are not allowed (or are risky) in file names
        for i in '\\/:*?"<>|$':
            filename = filename.replace(i, "")
        return filename
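A variant of the same idea, sketched with a regular expression: it replaces rather than deletes the unsafe characters and falls back to a default name when nothing usable is left. The character set (what Windows rejects in file names, plus control characters), the `replacement` and `default` parameters, and the name `safe_filename` are assumptions made for this example, not part of the function above.

```python
import re

# Characters that Windows rejects in file names, plus ASCII control characters
_UNSAFE_CHARS = re.compile(r'[\\/:*?"<>|\x00-\x1f]')


def safe_filename(name, replacement="_", default="unnamed"):
    """Replace unsafe characters and avoid returning an empty name."""
    # Windows also dislikes names that end in a dot or a space
    cleaned = _UNSAFE_CHARS.sub(replacement, name).rstrip(" .")
    return cleaned or default


print(safe_filename('a/b:c*?.jpg'))
print(safe_filename('???', replacement=''))
```

Replacing with `_` keeps two different URLs from collapsing to the same name as often as plain deletion would, though collisions are still possible.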