Problem reading audio amplitude with getMaxAmplitudeForInputDevice in HarmonyOS Next

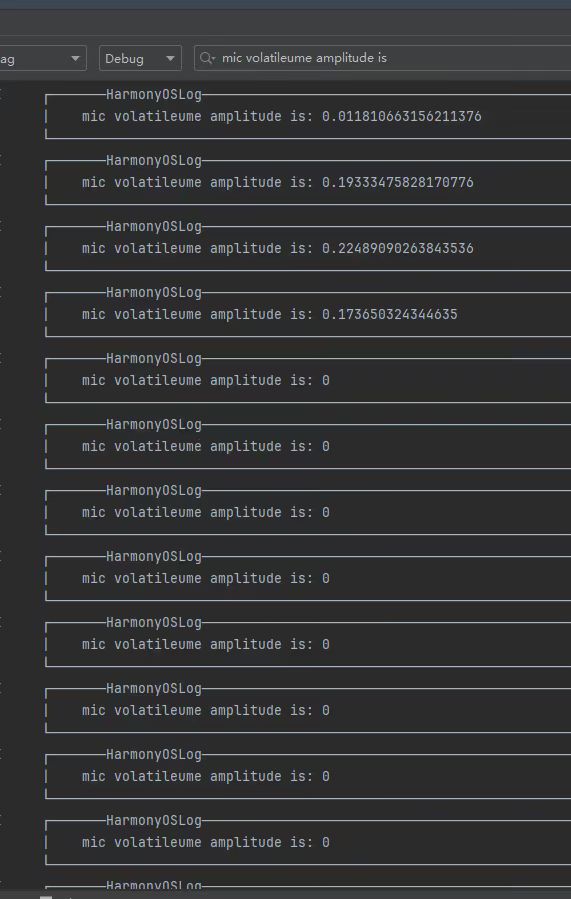

When recording audio with AudioCapturer, I use getMaxAmplitudeForInputDevice to monitor the microphone level in real time. After 10-30 seconds of recording, however, the value in the log becomes 0.

Devices: Pura X (6.0.0) and Pura 70 (5.1.0). Both phones behave the same.

HarmonyOS 5.0.5 Release SDK, which bundles the OpenHarmony SDK Ohos_sdk_public 5.0.5.165 unmodified (API Version 17 Release)

Log output:

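Demo (steps: after granting the permission, first tap the button that starts the amplitude display, wait a few seconds, then tap the button that starts recording):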

import { audio } from '@kit.AudioKit';
import { abilityAccessCtrl, common, Permissions } from '@kit.AbilityKit';
import { BusinessError } from '@kit.BasicServicesKit';

export class WebSocketAudioCapturer {
  audioCapturer: audio.AudioCapturer | null = null;
  isRecording: boolean = false;

  // Initialize the audio capturer
  initialize(): Promise<void> {
    return new Promise((resolve, reject) => {
      const streamInfo: audio.AudioStreamInfo = {
        samplingRate: audio.AudioSamplingRate.SAMPLE_RATE_16000,
        channels: audio.AudioChannel.CHANNEL_1,
        sampleFormat: audio.AudioSampleFormat.SAMPLE_FORMAT_S16LE,
        encodingType: audio.AudioEncodingType.ENCODING_TYPE_RAW
      };
      const audioCapturerInfo: audio.AudioCapturerInfo = {
        source: audio.SourceType.SOURCE_TYPE_MIC,
        capturerFlags: 0
      };
      const audioCapturerOptions: audio.AudioCapturerOptions = {
        streamInfo: streamInfo,
        capturerInfo: audioCapturerInfo
      };
      audio.createAudioCapturer(audioCapturerOptions, (err, capturer) => {
        if (err) {
          reject(`Failed to create the audio capturer: ${err.message}`);
          return;
        }
        this.audioCapturer = capturer;
        resolve();
      });
    });
  }

  // Start recording
  startRecording(readDataCallback: (buffer: ArrayBuffer) => void): Promise<void> {
    return new Promise((resolve, reject) => {
      if (!this.audioCapturer) {
        reject('The audio capturer is not initialized');
        return;
      }
      // Recording may only start from one of these states
      const stateGroup = [
        audio.AudioState.STATE_PREPARED,
        audio.AudioState.STATE_PAUSED,
        audio.AudioState.STATE_STOPPED
      ];
      if (!stateGroup.includes(this.audioCapturer.state.valueOf())) {
        reject('The audio capturer is in the wrong state');
        return;
      }
      // Register the callback invoked when audio data arrives
      this.audioCapturer.on('readData', readDataCallback);
      this.audioCapturer.start((err: BusinessError) => {
        if (err) {
          reject(`Failed to start recording: ${err.message}`);
        } else {
          this.isRecording = true;
          resolve();
        }
      });
    });
  }

  // Stop recording
  stopRecording(): Promise<void> {
    return new Promise((resolve, reject) => {
      if (!this.audioCapturer || !this.isRecording) {
        resolve();
        return;
      }
      // Only a running or paused capturer can be stopped
      if (![audio.AudioState.STATE_RUNNING,
        audio.AudioState.STATE_PAUSED].includes(this.audioCapturer.state.valueOf())) {
        resolve();
        return;
      }
      this.audioCapturer.stop((err: BusinessError) => {
        if (err) {
          reject(`Failed to stop recording: ${err.message}`);
        } else {
          this.isRecording = false;
          console.log('Recording stopped');
          resolve();
        }
      });
    });
  }

  // Release resources
  release(): void {
    if (this.audioCapturer) {
      if (![audio.AudioState.STATE_RELEASED, audio.AudioState.STATE_NEW].includes(this.audioCapturer.state.valueOf())) {
        this.audioCapturer.release();
      }
      this.audioCapturer = null;
    }
  }
}

@Entry
@Component
struct test {
  private audioCapturer: WebSocketAudioCapturer = new WebSocketAudioCapturer();
  @State waveHeights: number[] = []; // Bar heights currently displayed
  @State historyAmplitudes: number[] = []; // Amplitude history
  private audioManager: audio.AudioManager = audio.getAudioManager();
  private audioVolumeManager: audio.AudioVolumeManager = this.audioManager.getVolumeManager();
  private groupId: number = audio.DEFAULT_VOLUME_GROUP_ID;
  private audioVolumeGroupManager: audio.AudioVolumeGroupManager | undefined = this.audioVolumeManager.getVolumeGroupManagerSync(this.groupId);
  private capturerInfo: audio.AudioCapturerInfo = {
    source: audio.SourceType.SOURCE_TYPE_MIC,
    capturerFlags: 0
  };
  private maxWaveCount: number = 0; // Maximum number of bars, derived from the screen width
  private waveContainerWidth: number = 0; // Width of the waveform container
  setIntervalVolume: number = -1; // Timer ID of the polling interval

  startPlay() {
    // Clear any earlier timer so repeated taps do not stack intervals
    if (this.setIntervalVolume !== -1) {
      clearInterval(this.setIntervalVolume);
    }
    this.setIntervalVolume = setInterval(() => {
      this.getVolume()
    }, 200)
  }

  async requestPermissions(permissions: Permissions[]) {
    // Create the application permission manager
    const atManager = abilityAccessCtrl.createAtManager()
    // Request the user_grant permissions (a dialog appears on the first request only)
    const requestResult = await atManager.requestPermissionsFromUser(
      getContext(), // Application context
      permissions) // Permission list (array)
    // Use every() to check that all permissions were granted
    const isAuth = requestResult.authResults.every(item => item === abilityAccessCtrl.GrantStatus.PERMISSION_GRANTED)
    // Returning Promise.reject() stops the code after the caller's await from running;
    // the rejection can be handled with catch (an if check would work as well)
    return isAuth === true ? Promise.resolve(true) : Promise.reject(false)
  }

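  aboutToAppear(): void {
    this.calculateWaveCount()
  }

  build() {
    Column({ space: 10 }) {
      Button('Grant microphone permission')
        .onClick(async () => {
          // Await the request so a denied permission does not become an unhandled rejection
          try {
            await this.requestPermissions(['ohos.permission.MICROPHONE']);
          } catch (err) {
            console.error('Microphone permission was not granted');
          }
        })
      Button('Start recording').onClick(async () => {
        // Initialize the audio capturer
        await this.audioCapturer.initialize();
        // Start recording; the audio data itself is not used in this demo
        await this.audioCapturer.startRecording((buffer: ArrayBuffer) => {
        });
      })
      // Tap "Start amplitude display" first, then tap "Start recording" 3-5 seconds later
      Button('Start amplitude display').onClick(() => {
        this.startPlay()
      })
      this.WaveForm()
    }
    .width('100%')
    .height('100%')
    .justifyContent(FlexAlign.Center)
  }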

  getVolume() {
    /* let audioManager = audio.getAudioManager();
    let audioVolumeManager: audio.AudioVolumeManager = audioManager.getVolumeManager();
    let groupId: number = audio.DEFAULT_VOLUME_GROUP_ID;
    let audioVolumeGroupManager: audio.AudioVolumeGroupManager | undefined = undefined;
    audioVolumeGroupManager = audioVolumeManager.getVolumeGroupManagerSync(groupId);
    // Log.info('Promise returned to indicate that the volume group infos list is obtained.');
    let capturerInfo: audio.AudioCapturerInfo = {
      source: audio.SourceType.SOURCE_TYPE_MIC,
      capturerFlags: 0
    };*/
    audio.getAudioManager()
      .getRoutingManager()
      .getPreferredInputDeviceForCapturerInfo(this.capturerInfo)
      .then((data) => {
        this.audioVolumeGroupManager?.getMaxAmplitudeForInputDevice(data[0]).then((value) => {
          // Update the waveform with the new amplitude value
          this.updateWaveHeightsWithAmplitude(value);
          console.info(`mic volume amplitude is: ${value}`);
        }).catch((err: BusinessError) => {
          console.error("getMaxAmplitudeForInputDevice error" + JSON.stringify(err));
        })
      })
      .catch((err: BusinessError) => {
        console.error("getPreferredInputDeviceForCapturerInfo error" + JSON.stringify(err));
      })
  }

  // Update the bar heights so that new bars appear and old ones drop off in real time
  updateWaveHeightsWithAmplitude(amplitude: number) {
    // Append the new amplitude to the end of the history
    this.historyAmplitudes.push(amplitude);
    // If the history exceeds the maximum count, drop the oldest value
    if (this.historyAmplitudes.length > this.maxWaveCount) {
      this.historyAmplitudes.shift();
    }
    // Pad with zeros at the front while the history is still too short
    while (this.historyAmplitudes.length < this.maxWaveCount) {
      this.historyAmplitudes.unshift(0);
    }
    // Convert every history value to a bar height
    this.waveHeights = this.historyAmplitudes.map(amp => this.convertAmplitudeToHeight(amp));
    // console.log('this.waveHeights' + this.waveHeights)
  }

  convertAmplitudeToHeight(amp: number): number {
    // Clamp the input to the 0-1 range
    let normalizedAmp = Math.min(Math.max(amp, 0), 1);
    // Apply a square-root curve so that changes in small values (0-0.5) are more visible
    let enhancedAmp = Math.pow(normalizedAmp, 0.5);
    // Map the boosted value to the 5-50 range
    return 5 + Math.round(enhancedAmp * (50 - 5));
  }

  // Compute how many bars fit in the available width
  calculateWaveCount() {
    // Assume each bar is 4vp wide with 2vp of spacing
    const waveItemWidth = 6; // Bar width plus spacing
    this.waveContainerWidth = 300; // Default container width; should really come from the layout
    this.maxWaveCount = Math.floor(this.waveContainerWidth / waveItemWidth);
    // Initialize the bar heights and the amplitude history
    this.waveHeights = new Array(this.maxWaveCount).fill(5);
    this.historyAmplitudes = new Array(this.maxWaveCount).fill(0);
  }

  @Builder
  WaveForm() {
    Row() {
      ForEach(this.waveHeights, (height: number, index: number) => {
        Column()
          .width(2)
          .height(height)
          .backgroundColor('#9586FB')
          .animation({
            duration: 100,
            curve: Curve.EaseInOut
          })
      })
    }
    .width('100%')
    .padding({ left: 16, right: 16 })
    .height(50)
    .justifyContent(FlexAlign.SpaceAround)
  }
}
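To make the demo's amplitude-to-height mapping and rolling history easier to check in isolation, here is a minimal sketch of the same arithmetic in plain TypeScript, outside the ArkUI component. The names `amplitudeToHeight` and `pushAmplitude` are illustrative stand-ins for the demo's methods, not part of any kit; the 5 to 50 range mirrors the demo's values.

```typescript
// Map an amplitude in [0, 1] to a bar height in [minHeight, maxHeight].
// Same arithmetic as the demo's convertAmplitudeToHeight; the name is illustrative.
function amplitudeToHeight(amp: number, minHeight: number = 5, maxHeight: number = 50): number {
  const clamped = Math.min(Math.max(amp, 0), 1); // clamp to 0-1
  const boosted = Math.sqrt(clamped);            // square root lifts small values
  return minHeight + Math.round(boosted * (maxHeight - minHeight));
}

// Return a new history of exactly maxCount entries: the newest value goes last,
// the oldest values drop off the front, and zeros pad the front while it is short.
function pushAmplitude(history: number[], amp: number, maxCount: number): number[] {
  if (maxCount <= 0) {
    return [];
  }
  const next = history.concat(amp);
  const trimmed = next.length > maxCount ? next.slice(next.length - maxCount) : next;
  const padding = new Array<number>(maxCount - trimmed.length).fill(0);
  return padding.concat(trimmed);
}
```

For example, an amplitude of 0.25 becomes 0.5 after the square root, and 5 + round(0.5 × 45) gives a height of 28, so a quarter-scale signal already fills about half of the bar range.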

More hands-on tutorials on reading audio amplitude with getMaxAmplitudeForInputDevice in HarmonyOS Next are available at https://www.itying.com/category-93-b0.html

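Dear developer, the root cause is still being analyzed. For now, you can work around the issue by polling the volume at an interval below 200 ms, for example 190 ms. Thank you for your understanding and support.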
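A minimal sketch of how the suggested interval could be applied. `AmplitudePoller` is a hypothetical helper, not part of AudioKit. It defaults to 190 ms and keeps only one timer alive, so repeated taps cannot raise the effective polling rate. The timer functions are injected so the sketch also runs outside ArkUI; whether 190 ms avoids the problem on every device is not confirmed by the thread.

```typescript
// Hypothetical helper, not part of AudioKit: runs `poll` on a fixed interval
// and guarantees that at most one timer is active at a time.
type Schedule = (fn: () => void, ms: number) => number;
type Cancel = (id: number) => void;

class AmplitudePoller {
  private timerId: number = -1;
  private poll: () => void;
  private schedule: Schedule;
  private cancel: Cancel;
  private intervalMs: number;

  constructor(poll: () => void, schedule: Schedule, cancel: Cancel, intervalMs: number = 190) {
    this.poll = poll;
    this.schedule = schedule;
    this.cancel = cancel;
    this.intervalMs = intervalMs; // below 200 ms, following the suggested workaround
  }

  start(): void {
    if (this.timerId !== -1) {
      return; // already polling; do not stack a second timer
    }
    this.timerId = this.schedule(this.poll, this.intervalMs);
  }

  stop(): void {
    if (this.timerId === -1) {
      return;
    }
    this.cancel(this.timerId);
    this.timerId = -1;
  }

  isRunning(): boolean {
    return this.timerId !== -1;
  }
}
```

In the demo's component this could be created as `new AmplitudePoller(() => this.getVolume(), (fn, ms) => setInterval(fn, ms), (id) => clearInterval(id))`, with `start()` replacing the body of `startPlay()` and `stop()` called when the page goes away.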

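Dear developer, thank you for your feedback. We are working to resolve the issue as quickly as possible; please watch for upcoming releases. Thank you for your understanding and support.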

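In HarmonyOS Next, getMaxAmplitudeForInputDevice returns the current maximum amplitude of a given audio input device. It is called on an AudioVolumeGroupManager (obtained from AudioManager through the volume manager, as in the demo above) and takes the input device's AudioDeviceDescriptor. It returns a Promise that resolves to a number in the range 0 to 1. Before calling it, make sure the microphone permission has been granted and the input device is active. The API suits scenarios that monitor input level, such as volume visualization or sound-triggered features.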

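When getMaxAmplitudeForInputDevice returns 0 in HarmonyOS Next, the cause is usually related to audio resource management. Judging from the code, the problem may come from one of the following: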

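- Audio resource conflict: once AudioCapturer starts recording, the system may assign the audio input device to the capturer, so getMaxAmplitudeForInputDevice can no longer obtain a valid audio stream.

- Device exclusivity: some audio input devices may be exclusive in HarmonyOS. Once AudioCapturer holds the microphone, other audio query interfaces may be unable to read the device state at the same time.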
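Neither explanation is confirmed in this thread, so it may help to pin down when the readings actually flatline before changing the recording flow. The sketch below uses a hypothetical `ZeroRunDetector` that counts consecutive zero readings, on the assumption that a long unbroken run of exact zeros points to a stuck reading and not to a quiet room. The default threshold of 10 readings (about 2 seconds at a 200 ms interval) is an arbitrary choice.

```typescript
// Hypothetical diagnostic helper: flags a suspiciously long run of zero readings.
class ZeroRunDetector {
  private zeroRun: number = 0;
  private threshold: number;

  constructor(threshold: number = 10) {
    this.threshold = threshold; // number of consecutive zeros treated as "stuck"
  }

  // Feed one amplitude reading. Returns true while the current run of
  // consecutive zeros is at least `threshold` long.
  push(amplitude: number): boolean {
    this.zeroRun = amplitude === 0 ? this.zeroRun + 1 : 0;
    return this.zeroRun >= this.threshold;
  }
}
```

Calling `push(value)` from the amplitude callback and logging a timestamp the first time it returns true would show how long after the start of recording the value drops to 0.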

Suggested solutions:

// Methods on the page component (they use its setIntervalVolume timer and startPlay)

// Stop amplitude monitoring before recording starts
async startRecording(readDataCallback: (buffer: ArrayBuffer) => void): Promise<void> {
  if (this.setIntervalVolume !== -1) {
    clearInterval(this.setIntervalVolume);
    this.setIntervalVolume = -1;
  }
  // Original recording logic
  await this.audioCapturer.initialize();
  await this.audioCapturer.startRecording(readDataCallback);
}

// Resume amplitude monitoring after recording stops
async stopRecording(): Promise<void> {
  await this.audioCapturer.stopRecording();
  // Wait briefly before resuming amplitude monitoring
  setTimeout(() => {
    this.startPlay();
  }, 100);
}

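Alternatively, consider computing the amplitude directly from the audio data delivered to AudioCapturer's readData callback, which avoids the possible device resource conflict: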

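// Compute the real-time amplitude inside the readData callback
private calculateAmplitudeFromBuffer(buffer: ArrayBuffer): number {
  // Interpret the PCM bytes as 16-bit samples; a trailing odd byte is ignored
  const int16Array = new Int16Array(buffer, 0, Math.floor(buffer.byteLength / 2));
  if (int16Array.length === 0) {
    return 0; // Avoid dividing by zero on an empty buffer
  }
  let sum = 0;
  for (let i = 0; i < int16Array.length; i++) {
    sum += Math.abs(int16Array[i]);
  }
  const average = sum / int16Array.length;
  // Normalize the average to the 0-1 range
  return Math.min(average / 32768, 1);
}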

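Because this approach does not depend on getMaxAmplitudeForInputDevice, it should keep producing valid amplitude values for as long as the capturer delivers data, without dropping to 0.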
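The function above averages the absolute sample values. Since getMaxAmplitudeForInputDevice, as its name suggests, reports a maximum level, a peak-based measure may match its readings more closely. A minimal sketch, assuming the SAMPLE_FORMAT_S16LE format configured in the demo; `peakAmplitudeS16LE` is a hypothetical name. It reads through a DataView with explicit little-endian byte order, so the result does not depend on the platform's native byte order.

```typescript
// Peak absolute sample of a 16-bit little-endian PCM buffer, normalized to 0-1.
// Hypothetical helper for use inside a readData callback.
function peakAmplitudeS16LE(buffer: ArrayBuffer): number {
  const view = new DataView(buffer);
  const sampleCount = Math.floor(buffer.byteLength / 2); // ignore a trailing odd byte
  let peak = 0;
  for (let i = 0; i < sampleCount; i++) {
    const sample = Math.abs(view.getInt16(i * 2, true)); // true = little-endian
    if (sample > peak) {
      peak = sample;
    }
  }
  // 32768 is the magnitude of the most negative 16-bit sample
  return Math.min(peak / 32768, 1);
}
```

The returned value can be passed straight to the demo's `updateWaveHeightsWithAmplitude`, since both work on the 0 to 1 range.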